ForgeOps documentation

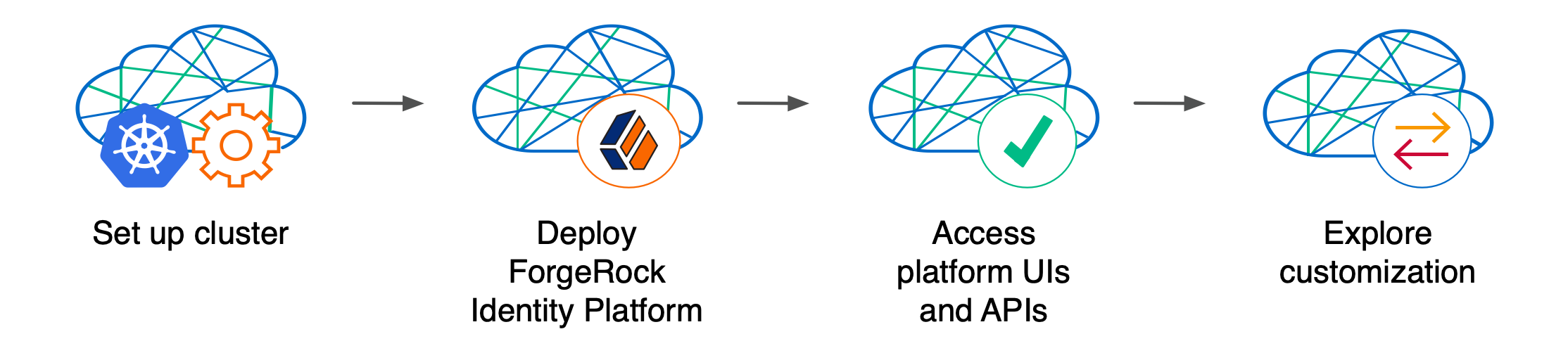

ForgeRock provides a number of resources to help you get started in the cloud. These resources demonstrate how to deploy the Ping Identity Platform on Kubernetes.

The Ping Identity Platform serves as the basis for our simple and comprehensive identity and access management solution. We help our customers deepen their relationships with their customers and improve the productivity and connectivity of their employees and partners. For more information about ForgeRock and about the platform, refer to https://www.forgerock.com.

Start here

Before you proceed, review the following precautions:

-

Deploying ForgeRock software in a containerized environment requires advanced proficiency in many technologies. Refer to Assess Your Skill Level for details.

-

If you don’t have experience with complex Kubernetes deployments, then either engage a certified ForgeRock consulting partner or deploy the platform on traditional architecture.

-

Don’t deploy ForgeRock software in Kubernetes in production until you have successfully deployed and tested the software in a non-production Kubernetes environment.

For information about obtaining support for Ping Identity Platform software, refer to Support from ForgeRock.

ForgeRock only offers ForgeRock software or services to legal entities that have entered into a binding license agreement with ForgeRock. When you install ForgeRock’s Docker images, you agree either that: 1) you are an authorized user of a ForgeRock customer that has entered into a license agreement with ForgeRock governing your use of the ForgeRock software; or 2) your use of the ForgeRock software is subject to the ForgeRock Subscription License Agreement.

Introducing the CDK and CDM

The forgeops repository and DevOps documentation address a range of our customers' typical business needs. The repository contains artifacts for two primary resources to help you with cloud deployment:

-

Cloud Developer’s Kit (CDK). The CDK is a minimal sample deployment for development purposes. Developers deploy the CDK, and then access AM’s and IDM’s admin UIs and REST APIs to configure the platform and build customized Docker images for the platform.

-

Cloud Deployment Model (CDM). The CDM is a reference implementation for ForgeRock cloud deployments. You can get a sample Ping Identity Platform deployment up and running in the cloud quickly using the CDM. After deploying the CDM, you can use it to explore how you might configure your Kubernetes cluster before you deploy the platform in production.

The CDM is a robust sample deployment for demonstration and exploration purposes only. It is not a production deployment.

| | CDK | CDM |
|---|---|---|
| Fully integrated AM, IDM, and DS installations | ✔ | ✔ |
| Randomly generated secrets | ✔ | ✔ |
| Resource requirement | Namespace in a GKE, EKS, AKS, or Minikube cluster | GKE, EKS, or AKS cluster |
| Can run on Minikube | ✔ | |
| Multi-zone high availability | | ✔ |
| Replicated directory services | | ✔ |
| Ingress configuration | | ✔ |
| Certificate management | | ✔ |
| Prometheus monitoring, Grafana reporting, and alert management | | ✔ |

ForgeRock’s DevOps documentation helps you deploy the CDK and CDM:

-

CDK documentation. Tells you how to install the CDK, modify the AM and IDM configurations, and create customized Docker images for the Ping Identity Platform.

-

CDM documentation. Tells you how to quickly create a Kubernetes cluster on Google Cloud, Amazon Web Services (AWS), or Microsoft Azure, install the Ping Identity Platform, and access components in the deployment.

-

How-tos. Contains how-tos for customizing monitoring, setting alerts, backing up and restoring directory data, modifying CDM’s default security configuration, and running lightweight benchmarks to test DS, AM, and IDM performance.

-

ForgeOps 7.4 release notes. Keeps you up-to-date with the latest changes to the forgeops repository.

Try out the CDK and the CDM

Before you start planning a production deployment, deploy either the CDK or the CDM—or both. If you’re new to Kubernetes, or new to the Ping Identity Platform, deploying these resources is a great way to learn. When you’ve finished deploying them, you’ll have sandboxes suitable for exploring ForgeRock cloud deployment.

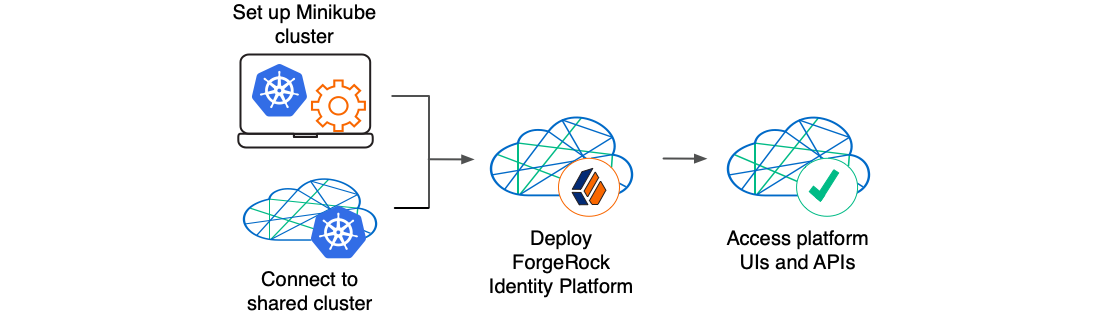

Deploy the CDK

The CDK is a minimal sample deployment of the Ping Identity Platform. If you have access to a cluster on Google Cloud, EKS, or AKS, you can deploy the CDK in a namespace on your cluster. You can also deploy the CDK locally in a standalone Minikube environment, and when you’re done, you’ll have a local Kubernetes cluster with the platform orchestrated on it.


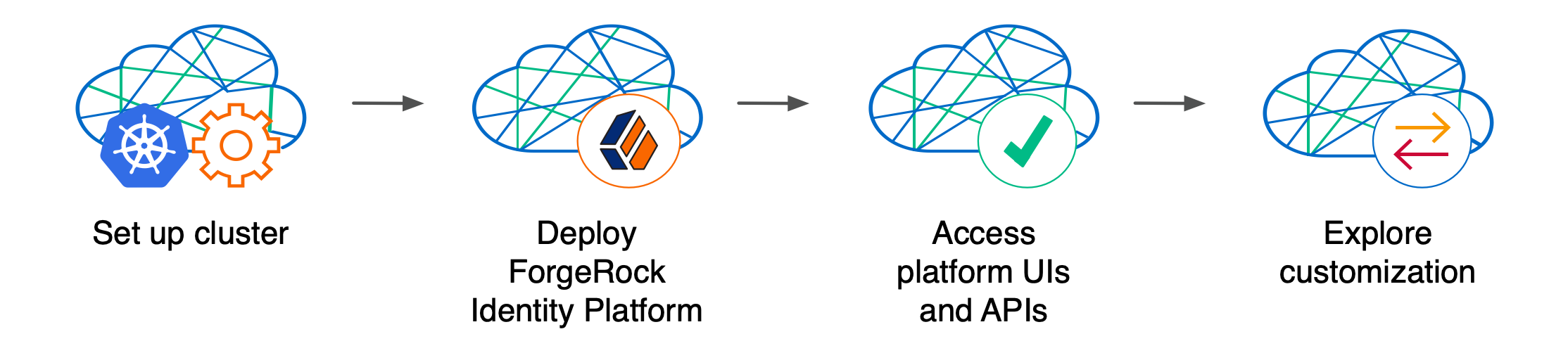

Deploy the CDM

Deploy the CDM on Google Cloud, AWS, or Microsoft Azure to quickly spin up the platform for demonstration purposes. You’ll get a feel for what it’s like to deploy the platform on a Kubernetes cluster in the cloud. When you’re done, you won’t have a production-quality deployment, but you will have a robust reference implementation of the platform.

After you get the CDM up and running, you can use it to test deployment customizations—options that you might want to use in production, but are not part of the CDM. Examples include, but are not limited to:

-

Running lightweight benchmark tests

-

Making backups of CDM data, and restoring the data

-

Securing TLS with a certificate that’s dynamically obtained from Let’s Encrypt

-

Using an ingress controller other than the Ingress-NGINX controller

-

Resizing the cluster to meet your business requirements

-

Configuring Alertmanager to issue alerts when usage thresholds have been reached


Build your own service

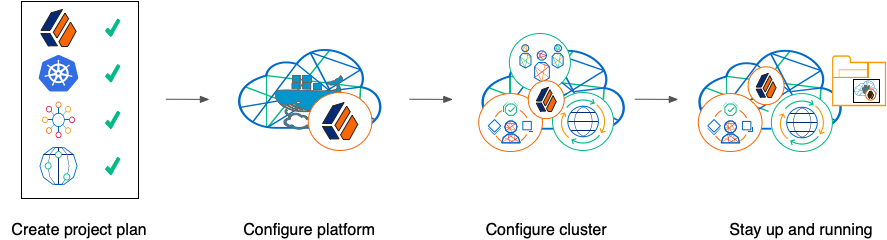

Perform the following activities to customize, deploy, and maintain a production Ping Identity Platform implementation in the cloud:

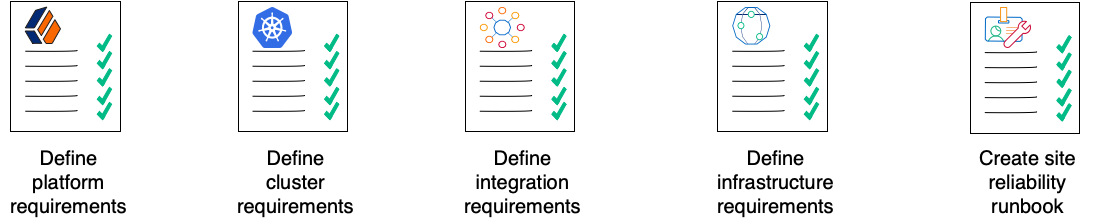

Create a project plan

After you’ve spent some time exploring the CDK and CDM, you’re ready to define requirements for your production deployment. Remember, the CDM is not a production deployment. Use the CDM to explore deployment customizations, and incorporate the lessons you’ve learned as you build your own production service.

Analyze your business requirements and define how the Ping Identity Platform needs to be configured to meet your needs. Identify systems to be integrated with the platform, such as identity databases and applications, and plan to perform those integrations. Assess and specify your deployment infrastructure requirements, such as backup, system monitoring, Git repository management, CI/CD, quality assurance, security, and load testing.

Be sure to do the following when you transition to a production environment:

-

Obtain and use certificates from an established certificate authority.

-

Create and test your backup plan.

-

Use a working production-ready FQDN.

-

Implement monitoring and alerting utilities.


Configure the platform

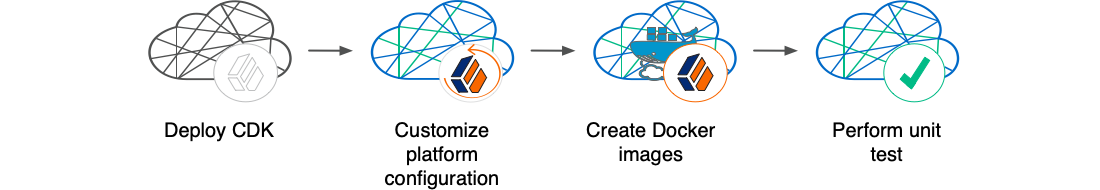

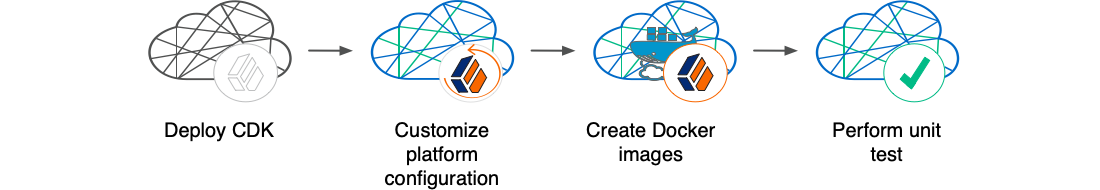

With your project plan defined, you’re ready to configure the Ping Identity Platform to meet the plan’s requirements. Install the CDK on your developers' computers. Configure AM and IDM. If needed, include integrations with external applications in the configuration. Iteratively unit test your configuration as you modify it. Build customized Docker images that contain the configuration.


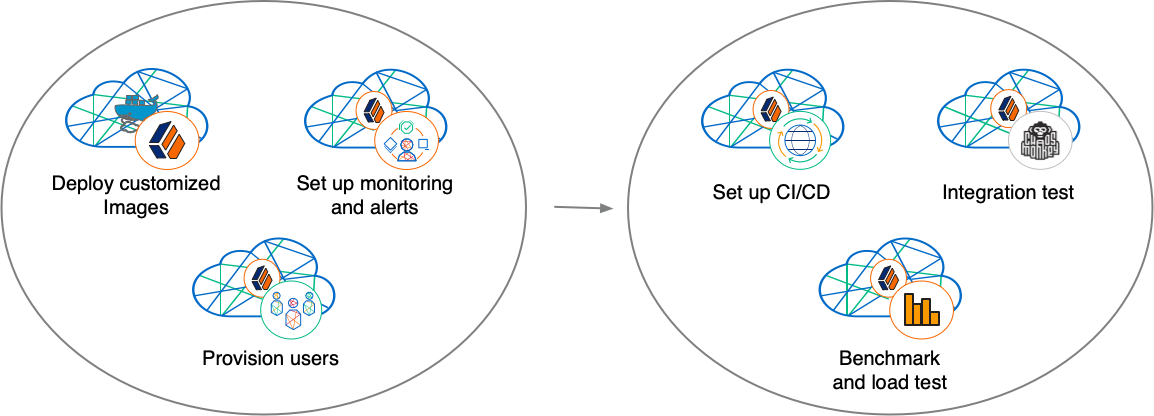

Configure your cluster

With your project plan defined, you’re ready to configure a Kubernetes cluster that meets the requirements defined in the plan. Install the platform using the customized Docker images developed in Configure the platform. Provision the ForgeRock identity repository with users, groups, and other identity data. Load test your deployment, and then size your cluster to meet service level agreements. Perform integration tests. Harden your deployment. Set up CI/CD for your deployment. Create monitoring alerts so that your site reliability engineers are notified when the system reaches thresholds that affect your SLAs. Implement database backup and test database restore. Simulate failures while under load to make sure your deployment can handle them.


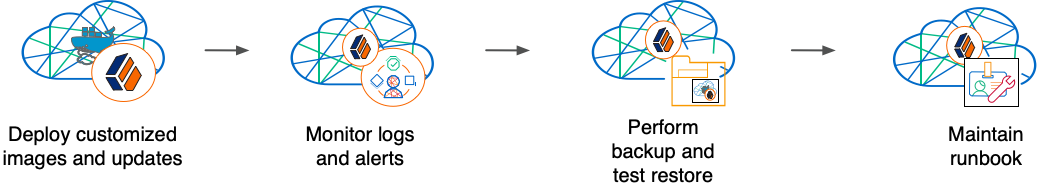

Stay up and running

By now, you’ve configured the platform, configured a Kubernetes cluster, and deployed the platform with your customized configuration. Run your Ping Identity Platform deployment in your cluster, continually monitoring it for performance and reliability. Take backups as needed.


Assess your skill level

Benchmarking and load testing

I can:

-

Write performance tests, using tools such as Gatling and Apache JMeter, to ensure that the system meets required performance thresholds and service level agreements (SLAs).

-

Resize a Kubernetes cluster, taking into account performance test results, thresholds, and SLAs.

-

Run Linux performance monitoring utilities, such as top.

CI/CD for cloud deployments

I have experience:

-

Designing and implementing a CI/CD process for a cloud-based deployment running in production.

-

Using a cloud CI/CD tool, such as Tekton, Google Cloud Build, Codefresh, AWS CloudFormation, or Jenkins, to implement a CI/CD process for a cloud-based deployment running in production.

-

Integrating GitOps into a CI/CD process.

Docker

I know how to:

-

Write Dockerfiles.

-

Create Docker images, and push them to a private Docker registry.

-

Pull and run images from a private Docker registry.

I understand:

-

The concepts of Docker layers, and building images based on other Docker images using the FROM instruction.

-

The difference between the COPY and ADD instructions in a Dockerfile.
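As a rough illustration of the Docker skills listed above, the following sketch writes a minimal Dockerfile. The Dockerfile builds on another image with FROM and contrasts COPY with ADD. The sketch then defines helpers that build, push, pull, and run an image. The base image, file names, registry path, and function names are hypothetical. The sketch also assumes you have already authenticated to your private registry with `docker login`.

```shell
#!/usr/bin/env bash
# Hypothetical helpers illustrating the Docker skills above; not part of ForgeOps.

# Write a minimal example Dockerfile into the given directory (default: current directory).
write_example_dockerfile() {
  cat > "${1:-.}/Dockerfile" <<'EOF'
# FROM: build on an existing image; its layers are reused, not rebuilt.
FROM alpine:3.19
# COPY: copies files from the build context into the image as-is.
COPY app.conf /etc/app/app.conf
# ADD: like COPY, but also unpacks local tar archives and can fetch URLs.
ADD plugins.tar.gz /opt/app/plugins/
EOF
}

# Build an image from a context directory and push it to a private registry.
# Assumes "docker login <registry>" has already succeeded.
build_and_push() {
  local context="$1" image="$2"   # e.g. registry.example.com/team/app:1.0 (hypothetical)
  docker build --tag "$image" "$context" && docker push "$image"
}

# Pull an image from the private registry and run it once.
pull_and_run() {
  local image="$1"
  docker pull "$image" && docker run --rm "$image"
}
```

For example, `build_and_push ./my-image registry.example.com/team/app:1.0` builds and pushes the image. The build only succeeds if `app.conf` and `plugins.tar.gz` exist in the build context.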

Git

I know how to:

-

Use a Git repository collaboration framework, such as GitHub, GitLab, or Bitbucket Server.

-

Perform common Git operations, such as cloning and forking repositories, branching, committing changes, submitting pull requests, merging, viewing logs, and so forth.

External application and database integration

I have expertise in:

-

AM policy agents.

-

Configuring AM policies.

-

Synchronizing and reconciling identity data using IDM.

-

Managing cloud databases.

-

Connecting Ping Identity Platform components to cloud databases.

Ping Identity Platform

I have:

-

Attended ForgeRock University training courses.

-

Deployed the Ping Identity Platform in production, and kept the deployment highly available.

-

Configured DS replication.

-

Passed the ForgeRock Certified Access Management and ForgeRock Certified Identity Management exams (highly recommended).

Google Cloud, AWS, or Azure (basic)

I can:

-

Use the graphical user interface for Google Cloud, AWS, or Azure to navigate, browse, create, and remove Kubernetes clusters.

-

Use the cloud provider’s tools to monitor a Kubernetes cluster.

-

Use the command-line interface for Google Cloud, AWS, or Azure.

-

Administer cloud storage.

Google Cloud, AWS, or Azure (expert)

In addition to the basic skills for Google Cloud, AWS, or Azure, I can:

-

Read the cluster creation shell scripts in the forgeops repository to see how the CDM cluster is configured.

-

Create and manage a Kubernetes cluster using an infrastructure-as-code tool such as Terraform, AWS CloudFormation, or Pulumi.

-

Configure multi-zone and multi-region Kubernetes clusters.

-

Configure cloud-provider identity and access management (IAM).

-

Configure virtual private clouds (VPCs) and VPC networking.

-

Manage keys in the cloud using a service such as Google Key Management Service (KMS), Amazon KMS, or Azure Key Vault.

-

Configure and manage DNS domains on Google Cloud, AWS, or Azure.

-

Troubleshoot a deployment running in the cloud using the cloud provider’s tools, such as Google Stackdriver, Amazon CloudWatch, or Azure Monitor.

-

Integrate a deployment with certificate management tools, such as cert-manager and Let’s Encrypt.

-

Integrate a deployment with monitoring and alerting tools, such as Prometheus and Alertmanager.

I have obtained one of the following certifications (highly recommended):

-

Google Certified Associate Cloud Engineer Certification.

-

AWS professional-level or associate-level certifications (multiple).

-

Azure Administrator.

Integration testing

I can:

-

Automate QA testing using a test automation framework.

-

Design a chaos engineering test for a cloud-based deployment running in production.

-

Use chaos engineering testing tools, such as Chaos Monkey.

Kubernetes (basic)

I’ve gone through the tutorials at kubernetes.io and am able to:

-

Use the kubectl command to determine the status of all the pods in a namespace, and to determine whether pods are operational.

-

Use the kubectl describe pod command to perform basic troubleshooting on pods that are not operational.

-

Use the kubectl command to obtain information about namespaces, secrets, deployments, and stateful sets.

-

Use the kubectl command to manage persistent volumes and persistent volume claims.
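The basic kubectl tasks above map to a handful of commands. The following sketch wraps them in two hypothetical helper functions. The namespace name is an assumption, so substitute the namespace you deployed into.

```shell
#!/usr/bin/env bash
# Hypothetical helpers wrapping the basic kubectl checks listed above.

# Show pod status plus the secrets, deployments, stateful sets, and volume
# claims in a namespace. Persistent volumes are cluster-scoped, so no namespace.
check_namespace() {
  local ns="${1:-my-namespace}"
  kubectl get pods --namespace "$ns"
  kubectl get secrets,deployments,statefulsets --namespace "$ns"
  kubectl get pvc --namespace "$ns"
  kubectl get pv
}

# Run "kubectl describe" on every pod whose phase is not Running.
# Note: a Running pod that fails its readiness probe is not caught by this filter.
describe_not_running_pods() {
  local ns="${1:-my-namespace}"
  kubectl get pods --namespace "$ns" \
    --field-selector=status.phase!=Running -o name |
    while read -r pod; do
      kubectl describe --namespace "$ns" "$pod"
    done
}
```

For example, `check_namespace my-namespace` lists the namespace's resources. The `READY` column of `kubectl get pods` shows whether each pod's containers are ready.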

Kubernetes (expert)

In addition to the basic skills for Kubernetes, I have:

-

Configured role-based access to cloud resources.

-

Configured Kubernetes objects, such as deployments and stateful sets.

-

Configured Kubernetes ingresses.

-

Configured Kubernetes resources using Kustomize.

-

Passed the Cloud Native Computing Foundation’s Certified Kubernetes Administrator (CKA) exam (highly recommended).

Kubernetes backup and restore

I know how to:

-

Schedule backups of Kubernetes persistent volumes as volume snapshots.

-

Restore Kubernetes persistent volumes from volume snapshots.

I have experience with one or more of the following:

-

Volume snapshots on Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), or Azure Kubernetes Service (AKS)

-

A third-party Kubernetes backup and restore product, such as Velero, Kasten K10, TrilioVault, Commvault, or Portworx PX-Backup.
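To make the snapshot skills above concrete, the following sketch creates a CSI `VolumeSnapshot` of a persistent volume claim. It then restores that snapshot into a new claim. The sketch assumes your cluster has the Kubernetes volume snapshot CRDs, a snapshot controller, and a snapshot-capable CSI driver installed. The claim, snapshot, snapshot class, and size values you pass in are hypothetical. Scheduling the backups (for example, with a Kubernetes CronJob) is left out.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: back up a PVC as a CSI volume snapshot, and restore a
# snapshot into a new PVC. Both apply to the current kubectl context's namespace.

# Create a VolumeSnapshot of an existing persistent volume claim.
snapshot_pvc() {
  local pvc="$1" snapshot="$2" snapshot_class="$3"
  kubectl apply -f - <<EOF
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: ${snapshot}
spec:
  volumeSnapshotClassName: ${snapshot_class}
  source:
    persistentVolumeClaimName: ${pvc}
EOF
}

# Create a new PVC whose initial contents come from a VolumeSnapshot.
# The requested size must be at least the size of the snapshotted volume.
restore_pvc() {
  local snapshot="$1" new_pvc="$2" size="$3"
  kubectl apply -f - <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${new_pvc}
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: ${size}
  dataSource:
    apiGroup: snapshot.storage.k8s.io
    kind: VolumeSnapshot
    name: ${snapshot}
EOF
}
```

For example, you could run `snapshot_pvc my-pvc my-snapshot my-snapshot-class` now and `restore_pvc my-snapshot my-restored-pvc 100Gi` later. The restored claim uses the cluster's default storage class unless you add `storageClassName`.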

Project planning and management for cloud deployments

I have planned and managed:

-

A production deployment in the cloud.

-

A production deployment of Ping Identity Platform.

Security and hardening for cloud deployments

I can:

-

Harden a Ping Identity Platform deployment.

-

Configure TLS, including mutual TLS, for a multi-tiered cloud deployment.

-

Configure cloud identity and access management and role-based access control for a production deployment.

-

Configure encryption for a cloud deployment.

-

Configure Kubernetes network security policies.

-

Configure private Kubernetes networks, deploying bastion servers as needed.

-

Undertake threat modeling exercises.

-

Scan Docker images to ensure container security.

-

Configure and use private Docker container registries.
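As one small example of the network-security skills above, the following sketch applies a default-deny ingress NetworkPolicy to a namespace. It assumes your cluster's network plugin enforces NetworkPolicy, which not all plugins do. The namespace and function names are hypothetical. After you apply this policy, you would need additional policies that explicitly allow traffic, for example from your ingress controller. Without them, the platform's pods can't receive inbound traffic.

```shell
#!/usr/bin/env bash
# Hypothetical helper: deny all ingress traffic to every pod in a namespace.
# An empty podSelector selects all pods; listing Ingress with no ingress rules
# means no inbound traffic is allowed until other policies permit it.
deny_all_ingress() {
  local ns="${1:-my-namespace}"
  kubectl apply --namespace "$ns" -f - <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
spec:
  podSelector: {}
  policyTypes:
    - Ingress
EOF
}
```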

Site reliability engineering for cloud deployments

I can:

-

Manage multi-zone and multi-region deployments.

-

Implement DS backup and restore in order to recover from a database failure.

-

Manage cloud disk availability issues.

-

Analyze monitoring output and alerts, and respond should a failure occur.

-

Obtain logs from all the software components in my deployment.

-

Follow the cloud provider’s recommendations for patching and upgrading software in my deployment.

-

Implement an upgrade scheme, such as blue/green or rolling upgrades, in my deployment.

-

Create a Site Reliability Runbook for the deployment, documenting all the procedures to be followed and other relevant information.

-

Follow all the procedures in the project’s Site Reliability Runbook, and revise the runbook if it becomes out-of-date.

Support from ForgeRock

This appendix contains information about support options for the ForgeOps Cloud Developer’s Kit, the ForgeOps Cloud Deployment Model, and the Ping Identity Platform.

ForgeOps (ForgeRock DevOps) support

ForgeRock has developed artifacts in the forgeops and forgeops-extras Git repositories for the purpose of deploying the Ping Identity Platform in the cloud. The companion ForgeOps documentation provides examples, including the ForgeOps Cloud Developer’s Kit (CDK) and the ForgeOps Cloud Deployment Model (CDM), to help you get started.

These artifacts and documentation are provided on an "as is" basis. ForgeRock does not guarantee the individual success developers may have in implementing the code on their development platforms or in production configurations.

Licensing

ForgeRock only offers ForgeRock software or services to legal entities that have entered into a binding license agreement with ForgeRock. When you install ForgeRock’s Docker images, you agree either that: 1) you are an authorized user of a ForgeRock customer that has entered into a license agreement with ForgeRock governing your use of the ForgeRock software; or 2) your use of the ForgeRock software is subject to the ForgeRock Subscription License Agreement.

Support

ForgeRock provides support for the following resources:

- Artifacts in the forgeops Git repository:
  - Files used to build Docker images for the Ping Identity Platform:
    - Dockerfiles
    - Scripts and configuration files incorporated into ForgeRock’s Docker images
    - Canonical configuration profiles for the platform
  - Kustomize bases and overlays

For more information about support for specific directories and files in the forgeops repository, refer to the repository reference.

ForgeRock provides support for the Ping Identity Platform. For supported components, containers, and Java versions, refer to the following:

Support limitations

ForgeRock provides no support for the following:

- Artifacts in the forgeops-extras repository. For more information about support for specific directories and files in the forgeops-extras repository, refer to the repository reference.
- Artifacts other than Dockerfiles, Kustomize bases, and Kustomize overlays in the forgeops Git repository. Examples include scripts, example configurations, and so forth.
- Non-ForgeRock infrastructure. Examples include Docker, Kubernetes, Google Cloud Platform, Amazon Web Services, Microsoft Azure, and so forth.
- Non-ForgeRock software. Examples include Java, Apache Tomcat, NGINX, Apache HTTP Server, Certificate Manager, Prometheus, and so forth.
- Deployments that deviate from the published CDK and CDM architecture. Deployments that do not include the following architectural features are not supported:
  - PingAM (AM) and PingIDM (IDM) are integrated and deployed together in a Kubernetes cluster.
  - IDM login is integrated with AM.
  - AM uses PingDS (DS) as its data repository.
  - IDM uses DS as its repository.
- ForgeRock publishes reference Docker images for testing and development, but these images should not be used in production. For production deployments, it is recommended that customers build and run containers using a supported operating system and all required software dependencies. Additionally, to help ensure interoperability across container images and the ForgeOps tools, Docker images must be built using the Dockerfile templates as described here.

Third-party Kubernetes services

The ForgeOps reference tools are provided for use with Google Kubernetes Engine, Amazon Elastic Kubernetes Service, and Microsoft Azure Kubernetes Service. (ForgeRock supports running the identity platform on Red Hat OpenShift but does not provide the reference tools for Red Hat OpenShift.)

ForgeRock supports running the platform on Kubernetes. ForgeRock does not support Kubernetes itself. You must have a support contract in place with your Kubernetes vendor to resolve infrastructure issues. To avoid misunderstanding, note that ForgeRock cannot troubleshoot underlying Kubernetes issues.

You might need to modify ForgeRock’s deployment assets to adapt the platform to your Kubernetes implementation. For example, you might need to modify ingress routes, storage classes, or NAT gateways. Making these modifications requires competency in Kubernetes and familiarity with your chosen distribution.

Documentation access

ForgeRock publishes comprehensive documentation online:

-

The ForgeRock Knowledge Base offers a large and increasing number of up-to-date, practical articles that help you deploy and manage ForgeRock software.

While many articles are visible to community members, ForgeRock customers have access to much more, including advanced information for customers using ForgeRock software in a mission-critical capacity.

-

ForgeRock developer documentation, such as this site, aims to be technically accurate with respect to the sample that is documented. It is visible to everyone.

Problem reports and information requests

If you are a named customer Support Contact, contact ForgeRock using the Customer Support Portal to request information, or report a problem with Dockerfiles, Kustomize bases, or Kustomize overlays in the CDK or the CDM.

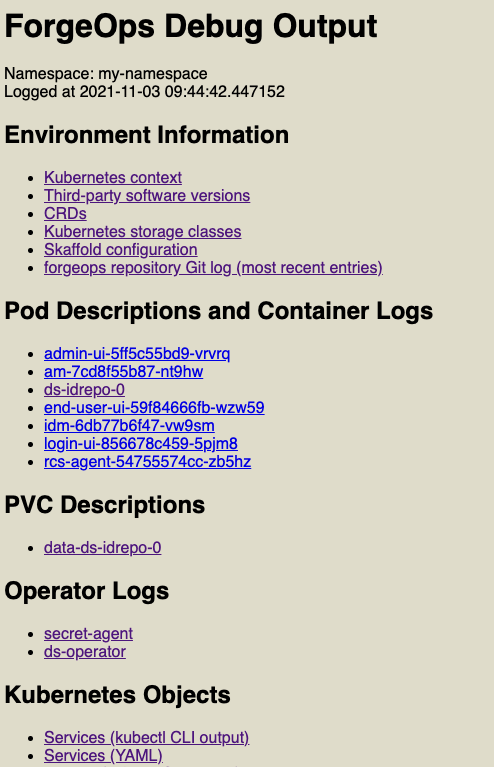

When requesting help with a problem, include the following information:

-

Description of the problem, including when the problem occurs and its impact on your operation.

-

Steps to reproduce the problem.

If the problem occurs on a Kubernetes system other than Minikube, GKE, EKS, or AKS, we might ask you to reproduce the problem on one of those.

-

HTML output from the debug-logs command. For more information, refer to Kubernetes logs and other diagnostics.

Suggestions for fixes and enhancements to unsupported artifacts

ForgeRock greatly appreciates suggestions for fixes and enhancements to unsupported artifacts in the forgeops and forgeops-extras repositories.

If you would like to report a problem with or make an enhancement request for an unsupported artifact in either repository, create a GitHub issue on the repository.

Contact information

ForgeRock provides support services, professional services, training through ForgeRock University, and partner services to assist you in setting up and maintaining your deployments. For a general overview of these services, refer to https://www.forgerock.com.

ForgeRock has staff members around the globe who support our international customers and partners. For details on ForgeRock’s support offering, including support plans and service-level agreements (SLAs), visit https://www.forgerock.com/support.

About the forgeops repository

Use ForgeRock’s forgeops repository to customize and deploy the Ping Identity Platform on a Kubernetes cluster.

The repository contains files needed for customizing and deploying the Ping Identity Platform on a Kubernetes cluster:

- Files used to build Docker images for the Ping Identity Platform:
  - Dockerfiles
  - Scripts and configuration files incorporated into ForgeRock’s Docker images
  - Canonical configuration profiles for the platform
- Kustomize bases and overlays

In addition, the repository contains numerous utility scripts and sample files. The scripts and samples are useful for:

-

Deploying ForgeRock’s CDK and CDM quickly and easily

-

Exploring monitoring, alerts, and security customization

-

Modeling a CI/CD solution for cloud deployment

Refer to Repository reference for information about the files in the repository, recommendations about how to work with them, and the support status for the files.

Repository updates

New forgeops repository features become available in the release/7.4-20240805 branch of the repository from time to time.

When you start working with the forgeops repository, clone the repository.

Depending on your organization’s setup, you’ll clone the repository either from ForgeRock’s public repository on GitHub or from a fork. See Git clone or Git fork? for more information.
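The following minimal sketch shows the clone step. It assumes ForgeRock's public repository is at https://github.com/ForgeRock/forgeops.git. If your organization uses a fork, pass the fork's URL instead. The helper name and default destination directory are hypothetical. After cloning, the helper confirms that the clone contains the release branch used in this documentation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: clone forgeops from ForgeRock's public repository (default)
# or from a fork URL, then confirm the 7.4 release branch exists on the remote.
clone_forgeops() {
  local repo_url="${1:-https://github.com/ForgeRock/forgeops.git}"
  local dest="${2:-forgeops}"
  git clone "$repo_url" "$dest" &&
    git -C "$dest" rev-parse --verify --quiet \
      "refs/remotes/origin/release/7.4-20240805" >/dev/null
}
```

For example, `clone_forgeops` clones the public repository into `./forgeops`. `clone_forgeops https://github.com/my-org/forgeops.git` clones a fork (the URL is hypothetical). A non-zero exit status means either the clone failed or the release branch is missing.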

Then, check out the release/7.4-20240805 branch and create a working branch. For example:

$ git checkout release/7.4-20240805
$ git checkout -b my-working-branch

ForgeRock recommends that you regularly incorporate updates to the release/7.4-20240805 branch into your working branch:

-

Get emails or subscribe to the ForgeOps RSS feed to be notified when there have been updates to ForgeOps 7.4.

-

Pull new commits in the release/7.4-20240805 branch into your clone’s release/7.4-20240805 branch.

-

Rebase the commits from the new branch into your working branch in your forgeops repository clone.
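The following small helper sketches the pull and rebase steps above. It assumes that `origin` is the repository you cloned, either ForgeRock's public repository or your organization's fork. It also assumes your working branch is named my-working-branch, as in the earlier example. The function name is hypothetical. Commit or stash local changes before you run it, because `git rebase` refuses to start with uncommitted changes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pull ForgeRock's latest release-branch commits and rebase
# your working branch onto them. Assumes "origin" is the repository you cloned.
update_working_branch() {
  local release_branch="${1:-release/7.4-20240805}"
  local working_branch="${2:-my-working-branch}"
  # Pull step: fast-forward your clone's release branch to the remote's.
  git checkout "$release_branch" || return 1
  git pull --ff-only origin "$release_branch" || return 1
  # Rebase step: replay your working-branch commits on top of the updated release branch.
  git checkout "$working_branch" || return 1
  git rebase "$release_branch"
}
```

If the rebase stops on a conflict, resolve the files it reports, `git add` them, and run `git rebase --continue`. To return to where you started, run `git rebase --abort` instead.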

It’s important to understand the impact of rebasing changes from the forgeops repository into your branches. Repository reference provides advice about which files in the forgeops repository to change, which files not to change, and what to look out for when you rebase. Follow the advice in Repository reference to reduce merge conflicts, and to better understand how to resolve them when you rebase your working branch with updates that ForgeRock has made to the release/7.4-20240805 branch.

Repository reference

For more information about support for the forgeops repository, see Support from ForgeRock.

Directories

bin

Example scripts you can use or model for a variety of deployment tasks.

Recommendation: Don’t modify the files in this directory. If you want to add your own scripts to the forgeops repository, create a subdirectory under bin, and store your scripts there.

Support Status: Sample files. Not supported by ForgeRock.

charts

Helm charts.

Recommendation: Don’t modify the files in this directory. If you want to update a values.yaml file, copy the file to a new file, and make changes there.

Support Status: Technology preview. Not supported by ForgeRock.
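The following minimal sketch applies the values.yaml recommendation. It assumes the Helm chart in your checkout lives at charts/identity-platform (check the charts directory in your clone) and that Helm 3 is installed. The copy's file name, the Helm release name, the namespace, and the function name are all hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy the chart's values.yaml once, then install or upgrade
# the chart using the copy. ForgeRock's values.yaml itself is never edited.
# Assumption: the chart directory is charts/identity-platform; adjust if needed.
install_with_custom_values() {
  local chart_dir="${1:-charts/identity-platform}"
  local custom_values="${2:-my-values.yaml}"
  local namespace="${3:-my-namespace}"
  # Only copy on first use so later runs keep your edits.
  if [ ! -f "$custom_values" ]; then
    cp "$chart_dir/values.yaml" "$custom_values" || return 1
  fi
  helm upgrade --install identity-platform "$chart_dir" \
    --namespace "$namespace" --values "$custom_values"
}
```

Passing a full copy overrides every default in the chart, including defaults that ForgeRock changes in later updates. A common alternative is to keep only the keys you actually change in the copy. New defaults then still take effect after a rebase.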

cluster

Example script that automates Minikube cluster creation.

Recommendation: Don’t modify the files in this directory.

Support Status: Sample file. Not supported by ForgeRock.

docker

Contains three types of files needed to build Docker images for the Ping Identity Platform: Dockerfiles, support files that go into Docker images, and configuration profiles.

Dockerfiles

Common deployment customizations require modifications to Dockerfiles in the docker directory.

Recommendation: Expect to encounter merge conflicts when you rebase changes from ForgeRock into your branches. Be sure to track changes you’ve made to Dockerfiles, so that you’re prepared to resolve merge conflicts after a rebase.

Support Status: Dockerfiles. Support is available from ForgeRock.

Support Files Referenced by Dockerfiles

When customizing ForgeRock’s default deployments, you might need to add files to the docker directory. For example, to customize the AM WAR file, you might need to add plugin JAR files, user interface customization files, or image files.

Recommendation: If you only add new files to the docker directory, you should not encounter merge conflicts when you rebase changes from ForgeRock into your branches. However, if you need to modify any files from ForgeRock, you might encounter merge conflicts. Be sure to track changes you’ve made to any files in the docker directory, so that you’re prepared to resolve merge conflicts after a rebase.

Support Status:

Scripts and other files from ForgeRock that are incorporated into Docker images for the Ping Identity Platform: Support is available from ForgeRock.

User customizations that are incorporated into custom Docker images for the Ping Identity Platform: Support is not available from ForgeRock.
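One way to track your changes to the docker directory is to diff your working branch against the release branch. The diff shows which files may conflict on the next rebase. The following minimal sketch assumes your working branch is checked out and the release branch is release/7.4-20240805. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list files under docker/ that your branch added (A),
# modified (M), or deleted (D) relative to ForgeRock's release branch.
list_docker_changes() {
  local release_branch="${1:-release/7.4-20240805}"
  # "A...B" diffs from the merge base of A and B, so only your branch's
  # changes appear, not new commits ForgeRock added to the release branch.
  git diff --name-status "${release_branch}...HEAD" -- docker/
}
```

Files marked M are ForgeRock files you modified, and they are the most likely to conflict. Files marked A are your own additions, which usually rebase cleanly.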

Configuration Profiles

Add your own configuration profiles to the docker directory using the export command. Do not modify ForgeRock’s internal-use-only idm-only and ig-only configuration profiles.

Recommendation: You should not encounter merge conflicts when you rebase changes from ForgeRock into your branches.

Support Status: Configuration profiles. Support is available from ForgeRock.

etc

Files used to support several examples, including the CDM.

Recommendation: Don’t modify the files in this directory (or its subdirectories). If you want to use CDM automated cluster creation as a model or starting point for your own automated cluster creation, then create your own subdirectories under etc, and copy the files you want to model into the subdirectories.

Support Status: Sample files. Not supported by ForgeRock.

kustomize

Artifacts for orchestrating the default deployment of Ping Identity Platform using Kustomize.

The forgeops install command does not use the

Support Status: Kustomize bases and overlays. Support is available from ForgeRock.

legacy-docs

Documentation for deploying the Ping Identity Platform using DevOps techniques.

Includes documentation for supported and deprecated versions of the forgeops repository.

Recommendation: Don’t modify the files in this directory.

Support Status:

Documentation for supported versions of the forgeops repository:

Support is available from ForgeRock.

Documentation for deprecated versions of the forgeops repository:

Not supported by ForgeRock.

Git clone or Git fork?

For the simplest use cases, such as a single user in an organization installing the CDK or CDM for a proof of concept or exploration of the platform, cloning ForgeRock’s public forgeops repository from GitHub provides a quick and adequate way to access the repository.

If, however, your use case is more complex, you might want to fork the forgeops repository, and use the fork as your common upstream repository. For example:

-

Multiple users in your organization need to access a common version of the repository and share changes made by other users.

-

Your organization plans to incorporate forgeops repository changes from ForgeRock.

-

Your organization wants to use pull requests when making repository updates.

If you’ve forked the forgeops repository:

-

You’ll need to synchronize your fork with ForgeRock’s public repository on GitHub when ForgeRock releases a new release tag.

-

Your users will need to clone your fork before they start working instead of cloning the public forgeops repository on GitHub. Because procedures in the CDK documentation and the CDM documentation tell users to clone the public repository, you’ll need to make sure your users follow different procedures to clone your fork instead.

-

The steps for initially obtaining and updating your repository clone will differ from the steps provided in the documentation. You’ll need to let users know how to work with the fork as the upstream instead of following the steps in the documentation.
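For fork maintainers, synchronizing with ForgeRock's public repository might look like the following sketch. It assumes you run it in a clone of your fork, so `origin` is the fork, and that you have push access to the fork. It also assumes the public repository is https://github.com/ForgeRock/forgeops.git. The `upstream` remote name and the helper name are conventions chosen for this example, not ForgeOps requirements.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fast-forward a fork's release branch to ForgeRock's and
# publish the branch and any new release tags to the fork.
sync_fork() {
  local upstream_url="${1:-https://github.com/ForgeRock/forgeops.git}"
  local release_branch="${2:-release/7.4-20240805}"
  # Add the "upstream" remote on first use; later runs reuse it.
  if ! git remote get-url upstream >/dev/null 2>&1; then
    git remote add upstream "$upstream_url" || return 1
  fi
  git fetch --tags upstream || return 1
  git checkout "$release_branch" || return 1
  # --ff-only fails instead of creating a merge commit if the fork has diverged.
  git merge --ff-only "upstream/$release_branch" || return 1
  git push --tags origin "$release_branch"
}
```

Once the fork carries ForgeRock's latest release-branch commits, your users can update from the fork with their usual pull and rebase steps.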

About the forgeops-extras repository

Use ForgeRock’s forgeops-extras repository to create sample Kubernetes clusters in which you can deploy the Ping Identity Platform.

Repository reference

For more information about support for the forgeops-extras repository, see Support from ForgeRock.

Directories

terraform

Example scripts and artifacts that automate CDM cluster creation and deletion.

Recommendation: Don’t modify the files in this directory. If you want to add your own cluster creation support files to the forgeops-extras repository, copy the terraform.tfvars file to a new file, and make changes there.

Support Status: Sample files. Not supported by ForgeRock.
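The following minimal sketch applies the terraform.tfvars recommendation. It assumes Terraform 0.14 or later, which provides the `-chdir` option, and that you run it from the directory that contains your forgeops-extras clone. The copy's file name and the helper name are hypothetical. ForgeRock's own cluster-creation procedure may expect a different file name, so check the CDM documentation before relying on this sketch.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy terraform.tfvars once, then plan with the copy.
# Terraform also loads terraform.tfvars automatically; values in a -var-file
# given on the command line take precedence over it.
plan_with_custom_tfvars() {
  local tf_dir="${1:-forgeops-extras/terraform}"
  local custom_vars="${2:-my-cluster.tfvars}"
  # Only copy on first use so later runs keep your edits.
  if [ ! -f "$tf_dir/$custom_vars" ]; then
    cp "$tf_dir/terraform.tfvars" "$tf_dir/$custom_vars" || return 1
  fi
  # With -chdir, the -var-file path is relative to tf_dir.
  terraform -chdir="$tf_dir" init &&
    terraform -chdir="$tf_dir" plan -var-file="$custom_vars"
}
```

For example, run `plan_with_custom_tfvars forgeops-extras/terraform my-cluster.tfvars` first. Once the plan looks right, run `terraform -chdir=forgeops-extras/terraform apply -var-file=my-cluster.tfvars`.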

Git clone or Git fork?

For the simplest use cases, such as a single user in an organization installing the CDK or CDM for a proof of concept or exploration of the platform, cloning ForgeRock’s public forgeops-extras repository from GitHub provides a quick and adequate way to access the repository.

If, however, your use case is more complex, you might want to fork the forgeops-extras repository, and use the fork as your common upstream repository. For example:

-

Multiple users in your organization need to access a common version of the repository and share changes made by other users.

-

Your organization plans to incorporate forgeops-extras repository changes from ForgeRock.

-

Your organization wants to use pull requests when making repository updates.

If you’ve forked the forgeops-extras repository:

-

You’ll need to synchronize your fork with ForgeRock’s public repository on GitHub whenever ForgeRock publishes a new release tag.

-

Your users will need to clone your fork, instead of the public forgeops-extras repository on GitHub, before they start working. Because procedures in the documentation tell users to clone the public repository, you’ll need to make sure your users follow different procedures to clone the fork instead.

-

The steps for initially obtaining and updating your repository clone will differ from the steps provided in the documentation. You’ll need to let users know how to work with the fork as their upstream repository instead of following the steps in the documentation.

CDK documentation

The CDK is a minimal sample deployment of the Ping Identity Platform on Kubernetes that you can use for demonstration and development purposes. It includes fully integrated AM, IDM, and DS installations, and randomly generated secrets.

If you have access to a cluster on Google Cloud, EKS, or AKS, you can deploy the CDK in a namespace on your cluster. You can also deploy the CDK locally in a standalone Minikube environment, and when you’re done, you’ll have a local Kubernetes cluster with the platform orchestrated on it.

About the Cloud Developer’s Kit


CDK deployments orchestrate a working version of the Ping Identity Platform on Kubernetes. They also let you build and run customized Docker images for the platform.

This documentation describes how to deploy the CDK, and then use it to create and test customized Docker images containing your custom AM and IDM configurations.

Before deploying the platform in production, you must customize it using the CDK. To better understand how this activity fits into the overall deployment process, see Configure the Platform.

Containerization

The CDK uses Docker for containerization. Start with evaluation-only Docker images from ForgeRock that include canonical configurations for AM and IDM. Then, customize the configurations, and create your own images that include your customized configurations.

For more information about Docker images for the Ping Identity Platform, see About custom images.

Orchestration

The CDK uses Kubernetes for container orchestration. The CDK has been tested on the following Kubernetes implementations:

-

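Single-node deployment suitable for demonstrations, proofs of concept, and development: Minikube

-

Cloud-based Kubernetes orchestration frameworks suitable for development and production deployment of the platform: Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), and Azure Kubernetes Service (AKS)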


CDK architecture

You deploy the CDK to get the Ping Identity Platform up and running on Kubernetes. CDK deployments are useful for demonstrations and proofs of concept. They’re also intended for development: building custom Docker images for the platform.

Do not use the CDK as the basis for a production deployment of the Ping Identity Platform.

Before you can deploy the CDK, you must have:

-

Access to a Kubernetes cluster with the Ingress-NGINX controller deployed on it.

-

Access to a namespace in the cluster.

-

Third-party software installed in your local environment, as described in the Setup section that pertains to your cluster type.

This diagram shows the CDK components:

The forgeops install command deploys the CDK in a Kubernetes cluster:

-

Installs the platform Docker images specified in the image defaulter. Initially, the image defaulter specifies the ForgeOps-provided Docker images for the ForgeOps 7.4 release, which are available from the public registry. These images use ForgeRock’s canonical configurations for AM and IDM.

-

Installs additional software as needed[1]:

After you’ve deployed the CDK, you can access AM and IDM UIs and REST APIs to customize the Ping Identity Platform’s configuration. You can then create Docker images that contain your customized configuration by using the forgeops build command. This command:

-

Builds Kubernetes manifests based on the Kustomize bases and overlays in your local forgeops repository clone.

-

Updates the image defaulter file to specify the customized images, so that the next time you deploy the CDK, your customized images will be used.

See am image and idm image for detailed information about building customized AM and IDM Docker images.

CDK pods

After deploying the CDK, the following pods run in your namespace:

am-

Runs PingAM.

When AM starts in a CDK deployment, it obtains its configuration from the AM Docker image specified in the image defaulter.

After the am pod has started, a job is triggered that populates AM’s application store with several agents and OAuth 2.0 client definitions that are used by the CDK.

ds-idrepo-0

The ds-idrepo-0 pod provides directory services for:

-

The identity repository shared by AM and IDM

-

The IDM repository

-

The AM application and policy store

-

AM’s Core Token Service

-

idm-

Runs PingIDM.

When IDM starts in a CDK deployment, it obtains its configuration from the IDM Docker image specified in the image defaulter.

In containerized deployments, IDM must retrieve its configuration from the file system and not from the IDM repository. The default values for the openidm.fileinstall.enabled and openidm.config.repo.enabled properties in the CDK’s system.properties file ensure that IDM retrieves its configuration from the file system. Do not override the default values for these properties.

UI pods

-

Several pods provide access to ForgeRock common user interfaces:

-

admin-ui

-

end-user-ui

-

login-ui
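To check a running namespace against the pod list above, you could use a small helper like the following sketch. The function name missing_cdk_pods is hypothetical. It matches pod names by the prefixes listed in this section (am-, ds-idrepo-0, idm-, admin-ui, end-user-ui, and login-ui).

```shell
#!/bin/sh
# Sketch: list which of the CDK pods described above are missing.
# Reads pod names, one per line, on stdin. Prints each missing prefix and
# returns nonzero if any are missing.
missing_cdk_pods() {
  pods=$(cat)
  missing=0
  for prefix in am- ds-idrepo-0 idm- admin-ui end-user-ui login-ui; do
    if ! printf '%s\n' "$pods" | grep -q "^$prefix"; then
      echo "$prefix"
      missing=1
    fi
  done
  return "$missing"
}
```

For example: kubectl get pods -o name | sed 's|^pod/||' | missing_cdk_pods. The am- prefix includes the hyphen, so it doesn’t match the pods of other workloads whose names merely start with "am".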


Minikube setup checklist

forgeops repository

Before you can deploy the CDK or the CDM, you must first get the forgeops repository and check out the release/7.4-20240805 branch:

-

Clone the forgeops repository. For example:

$ git clone https://github.com/ForgeRock/forgeops.git

The forgeops repository is a public Git repository. You do not need credentials to clone it.

-

Check out the release/7.4-20240805 branch:

$ cd forgeops
$ git checkout release/7.4-20240805

Depending on your organization’s repository strategy, you might need to clone the repository from a fork instead of cloning ForgeRock’s public repository. You might also need to create a working branch from the release/7.4-20240805 branch. For more information, refer to Repository Updates.
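If your strategy calls for a working branch, you can combine the clone and checkout steps as in the following sketch. The function name clone_release_branch and the working branch name are hypothetical. The repository URL can be ForgeRock’s public repository or your organization’s fork.

```shell
#!/bin/sh
# Sketch: clone a forgeops repository and start a working branch from a
# release branch.
clone_release_branch() {
  repo_url=$1      # public repository or your organization's fork
  dest=$2          # directory to clone into
  release=$3       # release branch, e.g. release/7.4-20240805
  work_branch=$4   # hypothetical working branch name, e.g. my-team-work

  if [ -z "$repo_url" ] || [ -z "$dest" ] || [ -z "$release" ] || [ -z "$work_branch" ]; then
    echo "usage: clone_release_branch REPO_URL DEST RELEASE_BRANCH WORK_BRANCH" >&2
    return 1
  fi

  git clone --quiet "$repo_url" "$dest" || return 1
  # Create the working branch from the remote-tracking release branch.
  git -C "$dest" checkout --quiet -b "$work_branch" "origin/$release"
}
```

For example: clone_release_branch https://github.com/ForgeRock/forgeops.git forgeops release/7.4-20240805 my-team-work. Because the working branch starts from a remote-tracking branch, Git’s default settings also configure it to track the release branch.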


Third-party software

Before performing a demo deployment, you must obtain non-ForgeRock software and install it on your local computer.

The versions listed in this section have been validated for deploying the Ping Identity Platform and building custom Docker images for it. Earlier and later versions will probably work. If you want to try using versions that are not in the tables, it is your responsibility to validate them.

| Software | Version | Homebrew package |
|---|---|---|
| Python 3 | 3.11.6 | |
| Bash | 5.2.26 | |
| Docker client | 24.0.6 | |
| Kubernetes client (kubectl) | 1.28.4 | |
| Kubernetes context switcher (kubectx) | 0.9.5 | |
| Kustomize | 5.2.1 | |
| Helm | 3.13.2 | |
| JQ | 1.7 | |
| Minikube | 1.32.0 | |
| Hyperkit | 0.20210107 | |
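Validating versions that differ from the table is your responsibility, so it can help to compare what you have installed against the table. The following minimal sketch compares dot-separated numeric versions only and ignores pre-release suffixes. The function names are hypothetical.

```shell
#!/bin/sh
# Sketch: compare an installed tool version with the validated version
# from the table. Only dot-separated numeric parts are compared.

# Prints -1, 0, or 1 when $1 is older than, equal to, or newer than $2.
version_cmp() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    na = split(a, x, "."); nb = split(b, y, ".")
    n = (na > nb) ? na : nb
    for (i = 1; i <= n; i++) {
      xi = (i <= na) ? x[i] + 0 : 0   # missing parts count as 0
      yi = (i <= nb) ? y[i] + 0 : 0
      if (xi < yi) { print "-1"; exit }
      if (xi > yi) { print "1"; exit }
    }
    print "0"
  }'
}

# Reports whether NAME at version INSTALLED matches the VALIDATED version.
check_tool_version() {
  name=$1 installed=$2 validated=$3
  case "$(version_cmp "$installed" "$validated")" in
    0)  echo "$name $installed: validated version" ;;
    -1) echo "$name $installed: older than validated $validated; validate it yourself" ;;
    1)  echo "$name $installed: newer than validated $validated; validate it yourself" ;;
  esac
}
```

For example, for Helm, whose version output starts with a "v": check_tool_version Helm "$(helm version --template '{{.Version}}' | sed 's/^v//')" 3.13.2.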

Docker engine

In addition to the software listed in the preceding table, you’ll need to start a virtual machine that runs Docker engine before you can use the CDK:

-

On macOS systems, use Docker Desktop or an alternative, such as Colima.

-

On Linux systems, use Docker Desktop for Linux, install Docker Engine from your Linux distribution, or use an alternative, such as Colima.

Minimum requirements for the virtual machine:

-

4 CPUs

-

10 GB RAM

-

60 GB disk space
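You can compare your Docker virtual machine’s settings with these minimums by using a check like the following sketch. The function name is hypothetical. You supply the values yourself, for example from your Docker Desktop or Colima settings.

```shell
#!/bin/sh
# Sketch: compare Docker VM resources with the CDK minimums
# (4 CPUs, 10 GB RAM, 60 GB disk). Arguments are whole numbers:
#   $1 CPUs, $2 RAM in GB, $3 disk space in GB
check_docker_vm() {
  cpus=$1 mem_gb=$2 disk_gb=$3
  short=0
  if [ "$cpus" -lt 4 ]; then
    echo "CPUs: $cpus (need at least 4)"; short=1
  fi
  if [ "$mem_gb" -lt 10 ]; then
    echo "RAM: $mem_gb GB (need at least 10 GB)"; short=1
  fi
  if [ "$disk_gb" -lt 60 ]; then
    echo "Disk: $disk_gb GB (need at least 60 GB)"; short=1
  fi
  if [ "$short" -eq 0 ]; then
    echo "OK"
  fi
  return "$short"
}
```

The docker info --format '{{.NCPU}}' command reports the number of CPUs that the Docker engine sees, so one option is check_docker_vm "$(docker info --format '{{.NCPU}}')" 10 60, with the memory and disk values taken from your VM settings.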


Minikube cluster

Minikube software runs a single-node Kubernetes cluster in a virtual machine.

The cluster/minikube/cdk-minikube start command creates a Minikube cluster with a configuration that’s adequate for a CDK deployment.

-

Determine which virtual machine driver you want Minikube to use. By default, the cdk-minikube command, which you run in the next step, starts Minikube with:

-

The Hyperkit driver on Intel x86-based macOS systems

-

The Docker driver on ARM-based macOS systems[3]

-

The Docker driver on Linux systems

The default driver option is fine for most users. For more information about Minikube virtual machine drivers, refer to Drivers in the Minikube documentation.

If you want to use a driver other than the default driver, specify the --driver option when you run the cdk-minikube command in the next step.

-

Set up Minikube:

$ cd /path/to/forgeops/cluster/minikube
$ ./cdk-minikube start
Running: "minikube start --cpus=3 --memory=9g --disk-size=40g --cni=true --kubernetes-version=stable --addons=ingress,volumesnapshots,metrics-server --driver=hyperkit"
😄 minikube v1.32.0 on Darwin 13.6
✨ Using the hyperkit driver based on user configuration
💿 Downloading VM boot image …
> minikube-v1.32.1-amd64.iso….: 65 B / 65 B [---------] 100.00% ? p/s 0s
> minikube-v1.32.1-amd64.iso: 292.96 MiB / 292.96 MiB 100.00% 6.66 MiB p/
👍 Starting control plane node minikube in cluster minikube
💾 Downloading Kubernetes v1.28.3 preload …
> preloaded-images-k8s-v18-v1…: 403.35 MiB / 403.35 MiB 100.00% 8.60 Mi
🔥 Creating hyperkit VM (CPUs=3, Memory=9216MB, Disk=40960MB) …
🐳 Preparing Kubernetes v1.28.3 on Docker 24.0.7 …
▪ Generating certificates and keys …
▪ Booting up control plane …
▪ Configuring RBAC rules …
🔗 Configuring CNI (Container Networking Interface) …
🔎 Verifying Kubernetes components…
▪ Using image registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20231011-8b53cabe0
▪ Using image registry.k8s.io/sig-storage/snapshot-controller:v6.1.0
▪ Using image registry.k8s.io/ingress-nginx/controller:v1.9.4
▪ Using image registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20231011-8b53cabe0
▪ Using image registry.k8s.io/metrics-server/metrics-server:v0.6.4
▪ Using image gcr.io/k8s-minikube/storage-provisioner:v5
🔎 Verifying ingress addon…
🌟 Enabled addons: storage-provisioner, metrics-server, default-storageclass, volumesnapshots, ingress
🏄 Done! kubectl is now configured to use "minikube" cluster and "default" namespace by default

-

Verify that your Minikube cluster is using the expected driver. For example:

Running: "minikube start --cpus=3 --memory=9g --disk-size=40g --cni=true --kubernetes-version=stable --addons=ingress,volumesnapshots,metrics-server --driver=hyperkit"
😄 minikube v1.32.0 on Darwin 13.6
✨ Using the hyperkit driver based on user configuration
...

If you are running Minikube on an ARM-based macOS system and the cdk-minikube output indicates that you are using the qemu driver, you probably have not started the virtual machine that runs your Docker engine.
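You can express the default driver choice described in step 1 as a small lookup, which might help if you script the --driver option. This sketch mirrors the defaults listed above and isn’t taken from the cdk-minikube script itself. It assumes the usual uname values (Darwin, Linux, x86_64, arm64), and it maps any Linux architecture to docker because the list names the Docker driver for Linux systems generally. The function name is hypothetical.

```shell
#!/bin/sh
# Sketch: return the Minikube driver that cdk-minikube uses by default on
# this platform, according to the list in this documentation.
default_minikube_driver() {
  os=${1:-$(uname -s)}     # Darwin or Linux
  arch=${2:-$(uname -m)}   # x86_64, arm64, aarch64, ...
  case "$os/$arch" in
    Darwin/x86_64) echo hyperkit ;;   # Intel x86-based macOS
    Darwin/arm64)  echo docker ;;     # ARM-based macOS
    Linux/*)       echo docker ;;     # Linux systems
    *)
      echo "no documented default driver for $os/$arch" >&2
      return 1
      ;;
  esac
}
```

To confirm which driver an existing cluster actually uses, recent Minikube releases include the driver for each profile in the output of minikube profile list.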


Namespace

Create a namespace in your new cluster.

ForgeRock recommends that you deploy the Ping Identity Platform in a namespace other than the default namespace. Deploying to a non-default namespace lets you separate workloads in a cluster. Separating a workload into a namespace lets you delete the workload easily; just delete the namespace.

To create a namespace:

-

Create a namespace in your Kubernetes cluster:

$ kubectl create namespace my-namespace
namespace/my-namespace created

-

Make the new namespace the active namespace in your local Kubernetes context. For example:

$ kubens my-namespace
Context "minikube" modified.
Active namespace is "my-namespace".
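You can wrap the two namespace steps in a small function that checks the namespace name first. Kubernetes namespace names must be valid RFC 1123 DNS labels: at most 63 lowercase alphanumeric characters or hyphens, starting and ending with an alphanumeric character. This sketch uses hypothetical function names. It calls kubectl config set-context --current --namespace, which has the same effect as the kubens step, so it works even if kubens isn’t installed.

```shell
#!/bin/sh
# Sketch: validate a namespace name, create the namespace if needed, and make
# it the active namespace in the current kubectl context.

# Kubernetes namespace names must be RFC 1123 DNS labels: 1-63 characters,
# lowercase letters, digits, or '-', starting and ending with a letter or digit.
valid_namespace_name() {
  name=$1
  if [ "${#name}" -lt 1 ] || [ "${#name}" -gt 63 ]; then
    return 1
  fi
  printf '%s\n' "$name" | grep -Eqx '[a-z0-9]([-a-z0-9]*[a-z0-9])?'
}

use_namespace() {
  ns=$1
  if ! valid_namespace_name "$ns"; then
    echo "invalid namespace name: $ns" >&2
    return 1
  fi
  # Create the namespace only if it doesn't exist yet.
  kubectl get namespace "$ns" >/dev/null 2>&1 || kubectl create namespace "$ns" || return 1
  # Same effect as `kubens "$ns"`: set the namespace of the current context.
  kubectl config set-context --current --namespace="$ns"
}
```

For example, use_namespace my-namespace creates my-namespace if it’s missing and then makes it active. You can rerun it safely.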


Hostname Resolution

Set up hostname resolution for the Ping Identity Platform servers you’ll deploy in your namespace:

-

Determine the Minikube ingress controller’s IP address:

-

If Minikube is running on an ARM-based macOS system[3], use 127.0.0.1 as the IP address.

-

If Minikube is running on an x86-based macOS system or on a Linux system, get the IP address by running the minikube ip command:

$ minikube ip
192.168.64.2

-

-

Choose an FQDN (referred to as the deployment FQDN) that you’ll use when you deploy the Ping Identity Platform and when you access its GUIs and REST APIs. Ensure that the FQDN is unique in the cluster in which you will deploy the Ping Identity Platform.

Examples in this documentation use cdk.example.com as the CDK deployment FQDN. You are not required to use cdk.example.com; you can specify any FQDN you like.

-

Add an entry to the /etc/hosts file to resolve the deployment FQDN:

ingress-ip-address cdk.example.com

For ingress-ip-address, specify the IP address from step 1.
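You can script steps 1 and 3 as shown below. This is a sketch only. The function names are hypothetical, and it treats macOS on arm64 as the ARM-based case. It takes the hosts file path as a parameter because writing /etc/hosts normally requires superuser privileges, and the parameter also lets you try the function on a scratch copy first. If a non-comment line already maps the FQDN, the function doesn’t append a new entry, so rerunning it doesn’t create duplicates.

```shell
#!/bin/sh
# Sketch: map the CDK deployment FQDN to the Minikube ingress IP address.

# Step 1: the ingress IP address. ARM-based macOS uses 127.0.0.1; otherwise
# ask Minikube. Optional arguments override uname for testing.
minikube_ingress_ip() {
  os=${1:-$(uname -s)}
  arch=${2:-$(uname -m)}
  if [ "$os" = Darwin ] && [ "$arch" = arm64 ]; then
    echo 127.0.0.1
  else
    minikube ip
  fi
}

# Step 3: append "IP FQDN" to a hosts file unless a non-comment line
# already lists the FQDN. Writing /etc/hosts usually requires root.
add_hosts_entry() {
  ip=$1 fqdn=$2 hosts_file=${3:-/etc/hosts}
  if awk -v h="$fqdn" '
      !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
      END { exit !found }' "$hosts_file"; then
    echo "$fqdn is already in $hosts_file; edit that line if the IP address changed"
    return 0
  fi
  printf '%s %s\n' "$ip" "$fqdn" >> "$hosts_file"
}
```

Run minikube ip as your own user, because the Minikube profile belongs to that user. Only the write to /etc/hosts needs elevated privileges. For example, capture the address first, and then run add_hosts_entry with that address from a script that you invoke with sudo.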


GKE setup checklist

forgeops repository

Before you can deploy the CDK or the CDM, you must first get the forgeops repository and check out the release/7.4-20240805 branch:

-

Clone the forgeops repository. For example:

$ git clone https://github.com/ForgeRock/forgeops.git

The forgeops repository is a public Git repository. You do not need credentials to clone it.

-

Check out the release/7.4-20240805 branch:

$ cd forgeops
$ git checkout release/7.4-20240805

Depending on your organization’s repository strategy, you might need to clone the repository from a fork instead of cloning ForgeRock’s public repository. You might also need to create a working branch from the release/7.4-20240805 branch. For more information, refer to Repository Updates.


Third-party software

Before performing a demo deployment, you must obtain non-ForgeRock software and install it on your local computer.

The versions listed in the following table have been validated for deploying the Ping Identity Platform and building custom Docker images for it. Earlier and later versions will probably work. If you want to try using versions that are not in the table, it is your responsibility to validate them.

Install the following third-party software:

| Software | Version | Homebrew package |
|---|---|---|
| Python 3 | 3.11.6 | |
| Bash | 5.2.26 | |
| Docker client | 24.0.6 | |
| Kubernetes client (kubectl) | 1.28.4 | |
| Kubernetes context switcher (kubectx) | 0.9.5 | |
| Kustomize | 5.2.1 | |
| Helm | 3.13.2 | |
| JQ | 1.7 | |
| Google Cloud SDK | 451.0.1 | |

Docker engine

In addition to the software listed in the preceding table, you’ll need to start a virtual machine that runs Docker engine before you can use the CDK:

-

On macOS systems, use Docker Desktop or an alternative, such as Colima.

-

On Linux systems, use Docker Desktop for Linux, install Docker Engine from your Linux distribution, or use an alternative, such as Colima.

The default configuration for a Docker virtual machine provides adequate resources for the CDK.


Cluster details

You’ll need to get some information about the cluster from your cluster administrator. You’ll provide this information as you perform various tasks to access the cluster.

Obtain the following cluster details:

-

The name of the Google Cloud project that contains the cluster.

-

The cluster name.

-

The Google Cloud zone in which the cluster resides.

-

The external IP address of your cluster’s ingress controller.

-

The location of the Docker registry from which your cluster will obtain images for the Ping Identity Platform.


Context for the shared cluster

Kubernetes uses contexts to access Kubernetes clusters. Before you can access the shared cluster, you must create a context on your local computer if it’s not already present.

To create a context for the shared cluster:

-

Run the kubectx command and review the output. The current Kubernetes context is highlighted:

-

If the current context references the shared cluster, there is nothing further to do. Proceed to Namespace.

-

If the context of the shared cluster is present in the kubectx command output, set the context as follows:

$ kubectx my-context
Switched to context "my-context".

After you have set the context, proceed to Namespace.

-

If the context of the shared cluster is not present in the kubectx command output, continue to the next step.

-

-

Configure the gcloud CLI to use your Google account. Run the following command:

$ gcloud auth application-default login

-

A browser window prompts you to log in to Google. Log in using your Google account.

A second screen requests several permissions. Select Allow.

A third screen should appear with the heading, You are now authenticated with the gcloud CLI!

-

Return to the terminal window and run the following command. Use the cluster name, zone, and project name you obtained from your cluster administrator:

$ gcloud container clusters \
 get-credentials cluster-name --zone google-zone --project google-project
Fetching cluster endpoint and auth data.
kubeconfig entry generated for cluster-name.

-

Run the kubectx command again and verify that the context for your Kubernetes cluster is now the current context.
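You can combine the decision logic in step 1 and the get-credentials command from the later step into a script along these lines. This is a sketch under stated assumptions. It assumes that gcloud uses its usual context name format, gke_PROJECT_ZONE_CLUSTER, and the function names are hypothetical. If you set DRY_RUN, the script prints the gcloud command instead of running it.

```shell
#!/bin/sh
# Sketch: make the shared GKE cluster's context current, fetching
# credentials with gcloud only when the context is missing.

# Context name that gcloud normally writes for a zonal GKE cluster.
gke_context_name() {
  echo "gke_$1_$2_$3"   # $1 project, $2 zone, $3 cluster
}

# Decide what to do: none, switch, or fetch-credentials.
#   $1 wanted context, $2 current context, $3 all contexts (one per line)
context_action() {
  wanted=$1 current=$2 all=$3
  if [ "$current" = "$wanted" ]; then
    echo none
  elif printf '%s\n' "$all" | grep -Fqx -- "$wanted"; then
    echo switch
  else
    echo fetch-credentials
  fi
}

# Run (or, with DRY_RUN set, print) the get-credentials command.
gke_get_credentials() {
  cluster=$1 zone=$2 project=$3
  if [ -z "$cluster" ] || [ -z "$zone" ] || [ -z "$project" ]; then
    echo "usage: gke_get_credentials CLUSTER ZONE PROJECT" >&2
    return 1
  fi
  set -- gcloud container clusters get-credentials "$cluster" \
    --zone "$zone" --project "$project"
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Put it together; call with CLUSTER ZONE PROJECT from your administrator.
use_gke_context() {
  wanted=$(gke_context_name "$3" "$2" "$1")
  case "$(context_action "$wanted" \
      "$(kubectl config current-context 2>/dev/null)" \
      "$(kubectl config get-contexts -o name)")" in
    none) ;;
    switch) kubectx "$wanted" ;;
    fetch-credentials) gke_get_credentials "$1" "$2" "$3" ;;
  esac
}
```

Run gcloud auth application-default login before calling use_gke_context, as described in the procedure. If your cluster administrator gives you a region instead of a zone, the same --zone argument and context name pattern use that location.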


Namespace

Create a namespace in the shared cluster. Namespaces let you isolate your deployments from other developers' deployments.

ForgeRock recommends that you deploy the Ping Identity Platform in a namespace other than the default namespace. Deploying to a non-default namespace lets you separate workloads in a cluster. Separating a workload into a namespace lets you delete the workload easily; just delete the namespace.

To create a namespace:

-

Create a namespace in your Kubernetes cluster:

$ kubectl create namespace my-namespace
namespace/my-namespace created

-

Make the new namespace the active namespace in your local Kubernetes context. For example:

$ kubens my-namespace
Context "my-context" modified.
Active namespace is "my-namespace".


Hostname resolution

You may need to set up hostname resolution for the Ping Identity Platform servers you’ll deploy in your namespace:

-

Choose an FQDN (referred to as the deployment FQDN) that you’ll use when you deploy the Ping Identity Platform and when you access its GUIs and REST APIs. Ensure that the FQDN is unique in the cluster in which you will deploy the Ping Identity Platform.

Examples in this documentation use cdk.example.com as the CDK deployment FQDN. You are not required to use cdk.example.com; you can specify any FQDN you like.

-

If DNS does not resolve your deployment FQDN, add an entry to the /etc/hosts file that maps the external IP address of your cluster’s ingress controller to the deployment FQDN. For example:

ingress-ip-address cdk.example.com

For ingress-ip-address, specify the external IP address of your cluster’s ingress controller that you obtained from your cluster administrator.


Amazon EKS setup checklist

forgeops repository

Before you can deploy the CDK or the CDM, you must first get the forgeops repository and check out the release/7.4-20240805 branch:

-

Clone the forgeops repository. For example:

$ git clone https://github.com/ForgeRock/forgeops.git

The forgeops repository is a public Git repository. You do not need credentials to clone it.

-

Check out the release/7.4-20240805 branch:

$ cd forgeops
$ git checkout release/7.4-20240805

Depending on your organization’s repository strategy, you might need to clone the repository from a fork instead of cloning ForgeRock’s public repository. You might also need to create a working branch from the release/7.4-20240805 branch. For more information, refer to Repository Updates.


Third-party software

Before performing a demo deployment, you must obtain non-ForgeRock software and install it on your local computer.

The versions listed in the following table have been validated for deploying the Ping Identity Platform and building custom Docker images for it. Earlier and later versions will probably work. If you want to try using versions that are not in the table, it is your responsibility to validate them.

Install the following third-party software:

| Software | Version | Homebrew package |
|---|---|---|
| Python 3 | 3.11.6 | |
| Bash | 5.2.26 | |
| Docker client | 24.0.6 | |
| Kubernetes client (kubectl) | 1.28.4 | |
| Kubernetes context switcher (kubectx) | 0.9.5 | |
| Kustomize | 5.2.1 | |
| Helm | 3.13.2 | |
| JQ | 1.7 | |
| Amazon AWS Command Line Interface | 2.14.5 | |
| AWS IAM Authenticator for Kubernetes | 0.6.13 | |
| Six (Python compatibility library) | 1.16.0 | |

Docker engine

In addition to the software listed in the preceding table, you’ll need to start a virtual machine that runs Docker engine before you can use the CDK:

-

On macOS systems, use Docker Desktop or an alternative, such as Colima.

-

On Linux systems, use Docker Desktop for Linux, install Docker Engine from your Linux distribution, or use an alternative, such as Colima.

The default configuration for a Docker virtual machine provides adequate resources for the CDK.


Cluster details

You’ll need to get some information about the cluster from your cluster administrator. You’ll provide this information as you perform various tasks to access the cluster.

Obtain the following cluster details:

-

Your AWS access key ID.

-

Your AWS secret access key.

-

The AWS region in which the cluster resides.

-

The cluster name.

-

The external IP address of your cluster’s ingress controller.

-

The location of the Docker registry from which your cluster will obtain images for the Ping Identity Platform.


Context for the shared cluster

Kubernetes uses contexts to access Kubernetes clusters. Before you can access the shared cluster, you must create a context on your local computer if it’s not already present.

To create a context for the shared cluster:

-

Run the kubectx command and review the output. The current Kubernetes context is highlighted:

-

If the current context references the shared cluster, there is nothing further to do. Proceed to Namespace.

-

If the context of the shared cluster is present in the kubectx command output, set the context as follows:

$ kubectx my-context
Switched to context "my-context".

After you have set the context, proceed to Namespace.

-

If the context of the shared cluster is not present in the kubectx command output, continue to the next step.

-

-

Run the aws configure command. This command logs you in to AWS and sets the AWS region. Use the access key ID, secret access key, and region you obtained from your cluster administrator. You do not need to specify a value for the default output format:

$ aws configure
AWS Access Key ID [None]:
AWS Secret Access Key [None]:
Default region name [None]:
Default output format [None]:

-

Run the following command. Use the cluster name you obtained from your cluster administrator:

$ aws eks update-kubeconfig --name my-cluster
Added new context arn:aws:eks:us-east-1:813759318741:cluster/my-cluster to /Users/my-user-name/.kube/config

-

Run the kubectx command again and verify that the context for your Kubernetes cluster is now the current context.

In Amazon EKS environments, the cluster owner must grant access to a user before the user can access cluster resources. For details about how the cluster owner can grant you access to the cluster, refer the cluster owner to Cluster access for multiple AWS users.
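To script the verification step, you can compare the current context with the name that aws eks update-kubeconfig writes by default, as shown in the output above. This sketch makes several assumptions: the cluster is in the standard aws partition (AWS China and GovCloud ARNs use different partitions), the cluster belongs to the same AWS account as your credentials, and you didn’t rename the context with --alias. The function names are hypothetical.

```shell
#!/bin/sh
# Sketch: verify that the EKS cluster's context is the current context.

# Default context name written by `aws eks update-kubeconfig`:
# the cluster ARN in the standard "aws" partition.
eks_context_name() {
  region=$1 account=$2 cluster=$3
  echo "arn:aws:eks:${region}:${account}:cluster/${cluster}"
}

# Fetch credentials for CLUSTER in REGION, then confirm the context switched.
use_eks_context() {
  cluster=$1 region=$2
  account=$(aws sts get-caller-identity --query Account --output text) || return 1
  aws eks update-kubeconfig --name "$cluster" --region "$region" || return 1
  expected=$(eks_context_name "$region" "$account" "$cluster")
  if [ "$(kubectl config current-context)" != "$expected" ]; then
    echo "current context is not $expected" >&2
    return 1
  fi
}
```

Because the cluster owner must grant you access first, a successful context switch doesn’t guarantee that you can reach cluster resources. Run a command such as kubectl get namespaces to confirm access.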


Namespace

Create a namespace in the shared cluster. Namespaces let you isolate your deployments from other developers' deployments.

ForgeRock recommends that you deploy the Ping Identity Platform in a namespace other than the default namespace. Deploying to a non-default namespace lets you separate workloads in a cluster. Separating a workload into a namespace lets you delete the workload easily; just delete the namespace.

To create a namespace:

-

Create a namespace in your Kubernetes cluster:

$ kubectl create namespace my-namespace
namespace/my-namespace created

-

Make the new namespace the active namespace in your local Kubernetes context. For example:

$ kubens my-namespace
Context "my-context" modified.
Active namespace is "my-namespace".


Hostname resolution

You may need to set up hostname resolution for the Ping Identity Platform servers you’ll deploy in your namespace:

-

Choose an FQDN (referred to as the deployment FQDN) that you’ll use when you deploy the Ping Identity Platform and when you access its GUIs and REST APIs. Ensure that the FQDN is unique in the cluster in which you will deploy the Ping Identity Platform.

Examples in this documentation use cdk.example.com as the CDK deployment FQDN. You are not required to use cdk.example.com; you can specify any FQDN you like.

-

If DNS does not resolve your deployment FQDN, add an entry to the /etc/hosts file that maps the external IP address of your cluster’s ingress controller to the deployment FQDN. For example:

ingress-ip-address cdk.example.com

For ingress-ip-address, specify the external IP address of your cluster’s ingress controller that you obtained from your cluster administrator.


AKS setup checklist

forgeops repository

Before you can deploy the CDK or the CDM, you must first get the forgeops repository and check out the release/7.4-20240805 branch:

-

Clone the forgeops repository. For example:

$ git clone https://github.com/ForgeRock/forgeops.git

The forgeops repository is a public Git repository. You do not need credentials to clone it.

-

Check out the release/7.4-20240805 branch:

$ cd forgeops
$ git checkout release/7.4-20240805

Depending on your organization’s repository strategy, you might need to clone the repository from a fork instead of cloning ForgeRock’s public repository. You might also need to create a working branch from the release/7.4-20240805 branch. For more information, refer to Repository Updates.


Third-party software

Before performing a demo deployment, you must obtain non-ForgeRock software and install it on your local computer.

The versions listed in the following table have been validated for deploying the Ping Identity Platform and building custom Docker images for it. Earlier and later versions will probably work. If you want to try using versions that are not in the table, it is your responsibility to validate them.

Install the following third-party software:

| Software | Version | Homebrew package |
|---|---|---|
| Python 3 | 3.11.6 | |
| Bash | 5.2.26 | |
| Docker client | 24.0.6 | |
| Kubernetes client (kubectl) | 1.28.4 | |
| Kubernetes context switcher (kubectx) | 0.9.5 | |
| Kustomize | 5.2.1 | |
| Helm | 3.13.2 | |
| JQ | 1.7 | |
| Azure Command Line Interface | 2.55.0 | |

Docker engine

In addition to the software listed in the preceding table, you’ll need to start a virtual machine that runs Docker engine before you can use the CDK:

-

On macOS systems, use Docker Desktop or an alternative, such as Colima.

-

On Linux systems, use Docker Desktop for Linux, install Docker Engine from your Linux distribution, or use an alternative, such as Colima.

The default configuration for a Docker virtual machine provides adequate resources for the CDK.


Cluster details

You’ll need to get some information about the cluster from your cluster administrator. You’ll provide this information as you perform various tasks to access the cluster.

Obtain the following cluster details:

-

The ID of the Azure subscription that contains the cluster. Be sure to obtain the hexadecimal subscription ID, not the subscription name.

-

The name of the resource group that contains the cluster.

-

The cluster name.

-

The external IP address of your cluster’s ingress controller.

-

The location of the Docker registry from which your cluster will obtain images for the Ping Identity Platform.


Context for the shared cluster

Kubernetes uses contexts to access Kubernetes clusters. Before you can access the shared cluster, you must create a context on your local computer if it’s not already present.

To create a context for the shared cluster:

-

Run the kubectx command and review the output. The current Kubernetes context is highlighted:

-

If the current context references the shared cluster, there is nothing further to do. Proceed to Namespace.

-

If the context of the shared cluster is present in the kubectx command output, set the context as follows:

$ kubectx my-context
Switched to context "my-context".

After you have set the context, proceed to Namespace.

-

If the context of the shared cluster is not present in the kubectx command output, continue to the next step.

-

-

Configure the Azure CLI to use your Microsoft Azure account. Run the following command:

$ az login

-

A browser window prompts you to log in to Azure. Log in using your Microsoft account.

A second screen should appear with the message, "You have logged into Microsoft Azure!"

-

Return to the terminal window and run the following command. Use the resource group, cluster name, and subscription ID you obtained from your cluster administrator:

$ az aks get-credentials \
 --resource-group my-fr-resource-group \
 --name my-fr-cluster \
 --subscription my-hex-azure-subscription-ID \
 --overwrite-existing

-

Run the kubectx command again and verify that the context for your Kubernetes cluster is now the current context.
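The az aks get-credentials command needs the hexadecimal subscription ID, not the subscription name, so a script can check the value before it calls the Azure CLI. This sketch assumes the ID uses the usual GUID layout (8-4-4-4-12 hexadecimal digits), and the function names are hypothetical. To look up the ID for a subscription name, run az account list --query "[].{name:name, id:id}" --output table, which lists both.

```shell
#!/bin/sh
# Sketch: guard against passing a subscription name instead of its ID.
is_subscription_id() {
  printf '%s\n' "$1" |
    grep -Eqx '[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}'
}

# Run the get-credentials command shown above after validating the ID.
aks_get_credentials() {
  resource_group=$1 cluster=$2 subscription=$3
  if ! is_subscription_id "$subscription"; then
    echo "expected a hexadecimal subscription ID, got: $subscription" >&2
    return 1
  fi
  az aks get-credentials \
    --resource-group "$resource_group" \
    --name "$cluster" \
    --subscription "$subscription" \
    --overwrite-existing
}
```

The --overwrite-existing option replaces any kubeconfig entry with the same name, so rerunning the function after your administrator rotates credentials is harmless.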


Namespace

Create a namespace in the shared cluster. Namespaces let you isolate your deployments from other developers' deployments.

ForgeRock recommends that you deploy the Ping Identity Platform in a namespace other than the default namespace. Deploying to a non-default namespace lets you separate workloads in a cluster. Separating a workload into a namespace lets you delete the workload easily; just delete the namespace.

To create a namespace:

-

Create a namespace in your Kubernetes cluster:

$ kubectl create namespace my-namespace
namespace/my-namespace created

-

Make the new namespace the active namespace in your local Kubernetes context. For example:

$ kubens my-namespace
Context "my-context" modified.
Active namespace is "my-namespace".


Hostname resolution

You may need to set up hostname resolution for the Ping Identity Platform servers you’ll deploy in your namespace:

-

Choose an FQDN (referred to as the deployment FQDN) that you’ll use when you deploy the Ping Identity Platform and when you access its GUIs and REST APIs. Ensure that the FQDN is unique in the cluster in which you will deploy the Ping Identity Platform.

Examples in this documentation use cdk.example.com as the CDK deployment FQDN. You are not required to use cdk.example.com; you can specify any FQDN you like.

-

If DNS does not resolve your deployment FQDN, add an entry to the /etc/hosts file that maps the external IP address of your cluster’s ingress controller to the deployment FQDN. For example:

ingress-ip-address cdk.example.com

For ingress-ip-address, specify the external IP address of your cluster’s ingress controller that you obtained from your cluster administrator.


CDK deployment

After you’ve set up your environment, deploy the CDK:

-

Set the active namespace in your local Kubernetes context to the namespace that you created when you performed the setup task.

-

Deploy the CDK by using either the forgeops command or Helm:

-

Use the forgeops command:

$ cd /path/to/forgeops/bin
$ ./forgeops install --cdk --fqdn cdk.example.com

By default, the forgeops install --cdk command uses the ForgeOps-provided Docker images for the ForgeOps 7.4 release, available from the public registry. However, if you’ve built custom images for the Ping Identity Platform, the forgeops install --cdk command uses your custom images.

If you prefer not to deploy the CDK using a single forgeops install command, refer to Alternative deployment techniques for more information.

The forgeops install command does not use the kustomization.yaml file during deployment. Therefore, the forgeops install command ignores any configuration changes you make in the kustomization.yaml file.

-

Use Helm (technology preview). On Minikube:

$ cd /path/to/forgeops/charts/scripts
$ ./install-prereqs
$ cd ../identity-platform
$ helm upgrade identity-platform \
 oci://us-docker.pkg.dev/forgeops-public/charts/identity-platform \
 --install --version 7.4 --namespace my-namespace \
 --set 'ds_idrepo.volumeClaimSpec.storageClassName=standard' \
 --set 'ds_cts.volumeClaimSpec.storageClassName=standard' \
 --set 'platform.ingress.hosts={cdk.example.com}'

When deploying the platform with Docker images other than the public evaluation-only images, you’ll also need to set additional Helm values, such as am.image.repository, am.image.tag, idm.image.repository, and idm.image.tag. For an example, refer to Redeploy AM: Helm installations (technology preview).

ForgeRock only offers ForgeRock software or services to legal entities that have entered into a binding license agreement with ForgeRock. When you install ForgeRock’s Docker images, you agree either that: 1) you are an authorized user of a ForgeRock customer that has entered into a license agreement with ForgeRock governing your use of the ForgeRock software; or 2) your use of the ForgeRock software is subject to the ForgeRock Subscription License Agreement.

-

-

In a separate terminal tab or window, run the kubectl get pods command to monitor the status of the deployment. Wait until all the pods are ready.

Your namespace should have the pods shown in this diagram.

-

Perform this step only if you are running Minikube on an ARM-based macOS system[3]:

In a separate terminal tab or window, run the minikube tunnel command, and enter your system’s superuser password when prompted:

$ minikube tunnel
✅ Tunnel successfully started

📌 NOTE: Please do not close this terminal as this process must stay alive for the tunnel to be accessible …

❗ The service/ingress forgerock requires privileged ports to be exposed: [80 443]
🔑 sudo permission will be asked for it.
❗ The service/ingress ig requires privileged ports to be exposed: [80 443]
🏃 Starting tunnel for service forgerock.
🔑 sudo permission will be asked for it.
🏃 Starting tunnel for service ig.
Password:

The tunnel creates networking that lets you access the Minikube cluster’s ingress on the localhost IP address (127.0.0.1). Leave the tab or window that started the tunnel open for as long as you run the CDK.

Refer to this post for an explanation of why a Minikube tunnel is required to access ingress resources when running Minikube on an ARM-based macOS system.

-

(Optional) Install a TLS certificate instead of using the default self-signed certificate in your CDK deployment. See TLS certificate for details.
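Instead of watching the kubectl get pods output by eye in the pod-monitoring step above, you can poll until the deployment settles. This is a minimal sketch, assuming bash, awk, and the default kubectl get pods column layout (NAME, READY, STATUS, ...); the function names all_pods_ready and wait_for_cdk_pods, the 10-second interval, and the 900-second default timeout are hypothetical choices.

```shell
#!/usr/bin/env bash
# Reads "kubectl get pods" output on stdin. Succeeds only if at least one pod
# is listed and every pod is either Running with all containers ready
# (READY n/n) or has STATUS Completed (a pod from a finished job).
all_pods_ready() {
  awk '
    NR == 1 { next }                # skip the header row
    NF == 0 { next }                # ignore blank lines
    {
      seen = 1
      if ($3 == "Completed") next
      split($2, ready, "/")         # READY column, for example "1/1"
      if ($3 != "Running" || ready[1] != ready[2]) notready = 1
    }
    END { exit (!seen || notready) }'
}

# Hypothetical polling loop over the active namespace of the current context:
# check every 10 seconds and give up after TIMEOUT seconds (default 900).
wait_for_cdk_pods() {
  local timeout="${1:-900}" waited=0
  until kubectl get pods 2>/dev/null | all_pods_ready; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "Pods not ready after ${timeout}s" >&2
      return 1
    fi
    sleep 10
    waited=$((waited + 10))
  done
  echo "All pods are ready."
}
```

The sketch parses the table rather than relying on kubectl wait --for=condition=Ready pod --all because pods from completed jobs never report the Ready condition, so such a wait would time out if your namespace contains any.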

Alternative deployment techniques

If you prefer not to deploy the CDK using a single forgeops install command, you can use one of these options:

-

Deploy the CDK component by component instead of with a single command. Staging the deployment can be useful if you need to troubleshoot a deployment issue.

-

The forgeops install command generates Kustomize manifests that let you recreate your CDK deployment. The manifests are written to the /path/to/forgeops/kustomize/deploy directory of your forgeops repository clone. Advanced users who prefer to work directly with Kustomize manifests that describe their CDK deployment can use the generated content in the kustomize/deploy directory as an alternative to using the forgeops command:

-

Generate an initial set of Kustomize manifests by running the forgeops install command. If you prefer to generate the manifests without installing the CDK, you can run the forgeops generate command.

-

Run kubectl apply -k commands to deploy CDK components and kubectl delete -k commands to remove them. Specify a manifest in the kustomize/deploy directory as an argument when you run these commands.

-

Use GitOps to manage CDK configuration changes to the kustomize/deploy directory instead of making changes to files in the kustomize/base and kustomize/overlay directories.
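If you work from the generated manifests, a thin wrapper can confirm that a manifest directory exists before passing it to kubectl apply -k or kubectl delete -k. This is a minimal sketch, assuming bash; the function name cdk_kustomize, the FORGEOPS_DEPLOY_DIR and DRY_RUN variables, and whatever component names you pass are hypothetical. List the kustomize/deploy directory to see which manifests forgeops actually generated.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run "kubectl apply -k" or "kubectl delete -k" on named
# manifest directories under kustomize/deploy.
# Usage: cdk_kustomize apply|delete COMPONENT...
# FORGEOPS_DEPLOY_DIR overrides the directory; DRY_RUN=1 only prints commands.
cdk_kustomize() {
  local action="${1:-}" name
  local deploy_dir="${FORGEOPS_DEPLOY_DIR:-/path/to/forgeops/kustomize/deploy}"
  shift || true
  case "$action" in
    apply|delete) ;;
    *) echo "usage: cdk_kustomize apply|delete COMPONENT..." >&2; return 2 ;;
  esac
  for name in "$@"; do
    # Only directories containing a kustomization.yaml file are accepted.
    if [ ! -f "$deploy_dir/$name/kustomization.yaml" ]; then
      echo "no kustomization.yaml in $deploy_dir/$name" >&2
      return 1
    fi
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "kubectl $action -k $deploy_dir/$name"
    else
      kubectl "$action" -k "$deploy_dir/$name" || return 1
    fi
  done
}
```

Components are processed in the order you name them, so you control sequencing explicitly rather than relying on directory order.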


UI and API access

Now that you’ve deployed the Ping Identity Platform, you’ll need to know how to access its administration tools. You’ll use these tools to build customized Docker images for the platform.

This page shows you how to access the Ping Identity Platform’s administrative UIs and REST APIs.

You access AM and IDM services through the Kubernetes ingress controller using their admin UIs and REST APIs.

You can’t access DS through the ingress controller, but you can use Kubernetes methods to access the DS pods.

For more information about how AM and IDM are configured in the CDK, see Configuration in the forgeops repository’s top-level README file.

AM services

To access the AM admin UI:

-

Set the active namespace in your local Kubernetes context to the namespace in which you have deployed the CDK.

-

Obtain the amadmin user’s password:

$ cd /path/to/forgeops/bin
$ ./forgeops info | grep amadmin
179rd8en9rffa82rcf1qap1z0gv1hcej (amadmin user)

-

Open a new window or tab in a web browser.

-

Go to https://cdk.example.com/platform.

The Kubernetes ingress controller handles the request, routing it to the login-ui pod.

The login UI prompts you to log in.

-

Log in as the amadmin user.

The Ping Identity Platform admin UI appears in the browser.

-

Select Native Consoles > Access Management.

The AM admin UI appears in the browser.
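When you script later steps, such as the REST API calls that follow, you can capture the amadmin password instead of copying it by hand. This is a minimal sketch, assuming bash, awk, and that forgeops info prints the password as the first field of a line labeled (amadmin user), as in the output shown above; the function name amadmin_password is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical filter: reads "forgeops info" output on stdin and prints the
# first field of the line labeled "(amadmin user)", that is, the password.
amadmin_password() {
  awk '/[(]amadmin user[)]/ { print $1; exit }'
}

# Example (requires a running CDK and your forgeops repository clone):
#   AMADMIN_PASSWORD=$(cd /path/to/forgeops/bin && ./forgeops info | amadmin_password)
```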

To access the AM REST APIs:

-

Start a terminal window session.

-

Run a curl command to verify that you can access the REST APIs through the ingress controller. For example:

$ curl \
  --insecure \
  --request POST \
  --header "Content-Type: application/json" \
  --header "X-OpenAM-Username: amadmin" \
  --header "X-OpenAM-Password: 179rd8en9rffa82rcf1qap1z0gv1hcej" \
  --header "Accept-API-Version: resource=2.0" \
  --data "{}" \
  "https://cdk.example.com/am/json/realms/root/authenticate"
{
  "tokenId":"AQIC5wM2...TU3OQ*",
  "successUrl":"/am/console",
  "realm":"/"
}
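To reuse the session token in later requests, you can pull tokenId out of the JSON response. This is a minimal sketch, assuming bash, sed, and curl with no JSON tool installed; the function names json_string_field and am_session_token are hypothetical, and the sed expression only handles flat JSON objects whose string values contain no escaped quotes, such as the response shown above. If jq is available, jq -r .tokenId is a more robust alternative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the string value of a top-level field from a flat
# JSON object on stdin. Not a JSON parser; escaped quotes are not handled.
json_string_field() {
  local field="$1"
  sed -n "s/.*\"${field}\"[[:space:]]*:[[:space:]]*\"\([^\"]*\)\".*/\1/p"
}

# Hypothetical wrapper around the authenticate request shown above.
# Arguments: deployment FQDN and the amadmin password. Prints the tokenId.
am_session_token() {
  local fqdn="$1" password="$2"
  # --insecure because the CDK uses a self-signed certificate by default.
  curl --silent --insecure --request POST \
    --header "Content-Type: application/json" \
    --header "X-OpenAM-Username: amadmin" \
    --header "X-OpenAM-Password: ${password}" \
    --header "Accept-API-Version: resource=2.0" \
    --data "{}" \
    "https://${fqdn}/am/json/realms/root/authenticate" \
    | json_string_field tokenId
}
```

For example, SESSION_TOKEN=$(am_session_token cdk.example.com "$AMADMIN_PASSWORD") stores the token for later curl commands.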

IDM services

To access the IDM admin UI:

-

Set the active namespace in your local Kubernetes context to the namespace in which you have deployed the CDK.

-

Obtain the amadmin user’s password:

$ cd /path/to/forgeops/bin
$ ./forgeops info | grep amadmin
vr58qt11ihoa31zfbjsdxxrqryfw0s31 (amadmin user)

-

Open a new window or tab in a web browser.

-

Go to https://cdk.example.com/platform.

The Kubernetes ingress controller handles the request, routing it to the login-ui pod.

The login UI prompts you to log in.

-

Log in as the amadmin user.

The Ping Identity Platform admin UI appears in the browser.

-

Select Native Consoles > Identity Management.

The IDM admin UI appears in the browser.

To access the IDM REST APIs:

-

Start a terminal window session.

-

If you haven’t already done so, get the amadmin user’s password using the forgeops info command.

-

AM authorizes IDM REST API access using the OAuth 2.0 authorization code flow. The CDK comes with the idm-admin-ui client, which is configured to let you get a bearer token using this OAuth 2.0 flow. You’ll use the bearer token in the next step to access the IDM REST API:

-

Get a session token for the amadmin user:

$ curl \
  --request POST \
  --insecure \
  --header "Content-Type: application/json" \
  --header "X-OpenAM-Username: amadmin" \
  --header "X-OpenAM-Password: vr58qt11ihoa31zfbjsdxxrqryfw0s31" \
  --header "Accept-API-Version: resource=2.0, protocol=1.0" \
  "https://cdk.example.com/am/json/realms/root/authenticate"
{
  "tokenId":"AQIC5wM...TU3OQ*",
  "successUrl":"/am/console",
  "realm":"/"
}

-

Get an authorization code. Specify the ID of the session token that you obtained in the previous step in the --cookie parameter:

$ curl \
  --dump-header - \
  --insecure \
  --request GET \
  --cookie "iPlanetDirectoryPro=AQIC5wM...TU3OQ*" \
  "https://cdk.example.com/am/oauth2/realms/root/authorize?redirect_uri=https://cdk.example.com/platform/appAuthHelperRedirect.html&client_id=idm-admin-ui&scope=openid%20fr:idm:*&response_type=code&state=abc123"
HTTP/2 302
server: nginx/1.17.10
date: ...
content-length: 0
location: https://cdk.example.com/platform/appAuthHelperRedirect.html?code=3cItL9G52DIiBdfXRngv2_dAaYM&iss=http://cdk.example.com:80/am/oauth2&state=abc123&client_id=idm-admin-ui
set-cookie: route=1595350461.029.542.7328; Path=/am; Secure; HttpOnly
x-frame-options: SAMEORIGIN
x-content-type-options: nosniff
cache-control: no-store
pragma: no-cache
set-cookie: OAUTH_REQUEST_ATTRIBUTES=DELETED; Expires=Thu, 01 Jan 1970 00:00:00 GMT; Path=/; HttpOnly; SameSite=none
strict-transport-security: max-age=15724800; includeSubDomains
x-forgerock-transactionid: ee1f79612f96b84703095ce93f5a5e7b

-

Exchange the authorization code for an access token. Specify the authorization code that you obtained in the previous step in the code parameter:

$ curl --request POST \
  --insecure \
  --data "grant_type=authorization_code" \
  --data "code=3cItL9G52DIiBdfXRngv2_dAaYM" \
  --data "client_id=idm-admin-ui" \
  --data "redirect_uri=https://cdk.example.com/platform/appAuthHelperRedirect.html" \
  "https://cdk.example.com/am/oauth2/realms/root/access_token"
{
  "access_token":"oPzGzGFY1SeP2RkI-ZqaRQC1cDg",
  "scope":"openid fr:idm:*",
  "id_token":"eyJ0eXAiOiJKV ... sO4HYqlQ",
  "token_type":"Bearer",
  "expires_in":239
}

-

-

Run a curl command to verify that you can access the openidm/config REST endpoint through the ingress controller. Use the access token returned in the previous step as the bearer token in the authorization header.

The following example command provides information about the IDM configuration:

$ curl \
  --insecure \
  --request GET \
  --header "Authorization: Bearer oPzGzGFY1SeP2RkI-ZqaRQC1cDg" \
  --data "{}" \
  "https://cdk.example.com/openidm/config"
{
  "_id":"",
  "configurations": [
    {
      "_id":"ui.context/admin",
      "pid":"ui.context.4f0cb656-0b92-44e9-a48b-76baddda03ea",
      "factoryPid":"ui.context"
    },
    ...
  ]
}
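The OAuth 2.0 steps above (authorization code, then access token) can be chained in a script once you have a session token. This is a minimal sketch, assuming bash, curl, tr, awk, and sed; the function names, the FQDN variable, and the fixed state value abc123 (copied from the example) are illustrative, and the helpers are simple text filters rather than general HTTP or JSON parsers. Unlike a production client, the sketch does not check that the returned state matches the one it sent.

```shell
#!/usr/bin/env bash
FQDN="${FQDN:-cdk.example.com}"   # assumption: your deployment FQDN
REDIRECT_URI="https://${FQDN}/platform/appAuthHelperRedirect.html"

# Reads response headers (curl --dump-header -) on stdin and prints the value
# of the "code" query parameter in the Location header.
auth_code_from_headers() {
  tr -d '\r' | awk 'tolower($1) == "location:" {
    n = split($2, parts, "[?&]")
    for (i = 2; i <= n; i++)
      if (index(parts[i], "code=") == 1) { print substr(parts[i], 6); exit }
  }'
}

# Prints the string value of a top-level field from a flat JSON object on stdin.
json_string_field() {
  sed -n "s/.*\"$1\"[[:space:]]*:[[:space:]]*\"\([^\"]*\)\".*/\1/p"
}

# Hypothetical: exchange an AM session token (tokenId) for an access token
# issued to the idm-admin-ui client, and print the access token.
idm_access_token() {
  local session_token="$1" code
  # Step 1: request an authorization code; keep only the response headers.
  code=$(curl --silent --insecure --output /dev/null --dump-header - \
      --cookie "iPlanetDirectoryPro=${session_token}" \
      "https://${FQDN}/am/oauth2/realms/root/authorize?redirect_uri=${REDIRECT_URI}&client_id=idm-admin-ui&scope=openid%20fr:idm:*&response_type=code&state=abc123" \
    | auth_code_from_headers)
  [ -n "$code" ] || { echo "no authorization code returned" >&2; return 1; }
  # Step 2: exchange the authorization code for an access token.
  curl --silent --insecure --request POST \
      --data "grant_type=authorization_code" \
      --data "code=${code}" \
      --data "client_id=idm-admin-ui" \
      --data "redirect_uri=${REDIRECT_URI}" \
      "https://${FQDN}/am/oauth2/realms/root/access_token" \
    | json_string_field access_token
}
```

For example, TOKEN=$(idm_access_token "$SESSION_TOKEN") followed by curl --insecure --header "Authorization: Bearer $TOKEN" "https://cdk.example.com/openidm/config" repeats the configuration query. The access token in the example response expires after 239 seconds, so request a new one when calls start failing.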

DS command-line access

The DS pods in the CDK are not exposed outside of the cluster. If you need to access one of the DS pods, use a standard Kubernetes method:

-